Imaginative, every page a miracle of publishing, more serious than it looks; I have been reading it at a pace of 3 or 4 pages a day and will soon start my second read.

It is published by Norma; here is the link (it includes a promotional video).

Two links from this world:

Zona Negativa (which gives it an average score of 9!)

Have a good day!

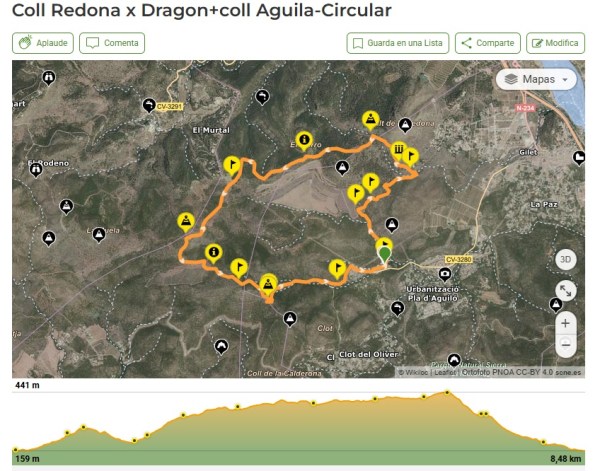

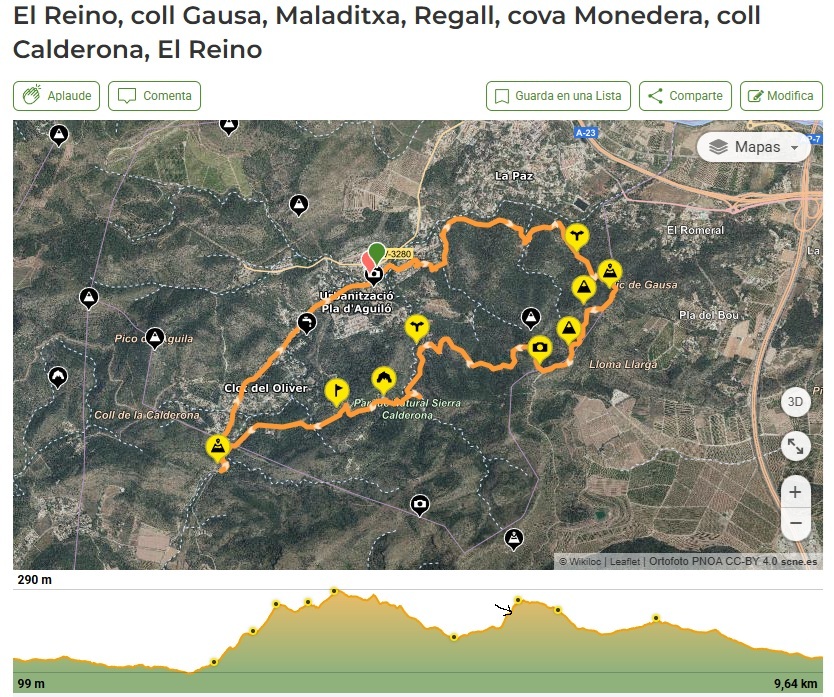

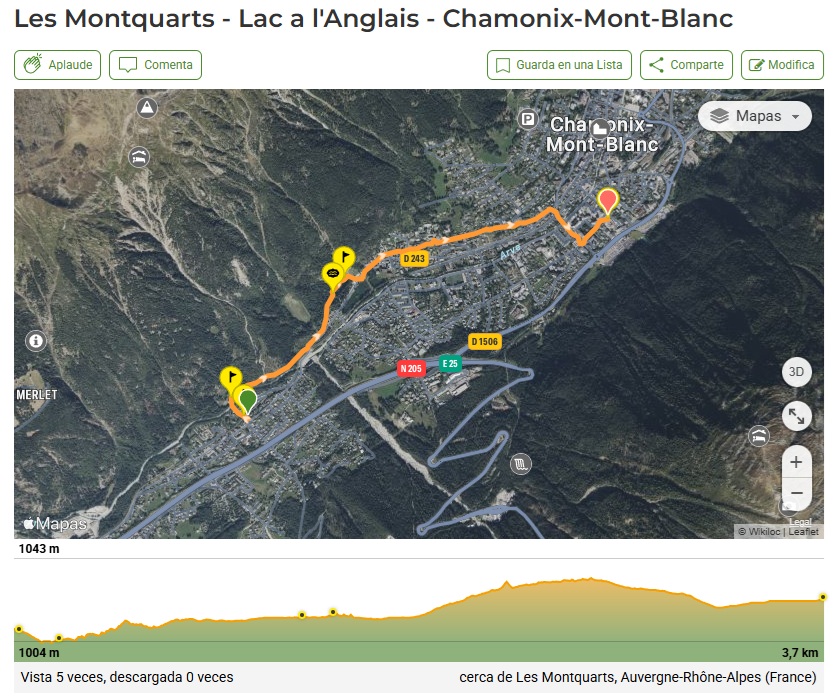

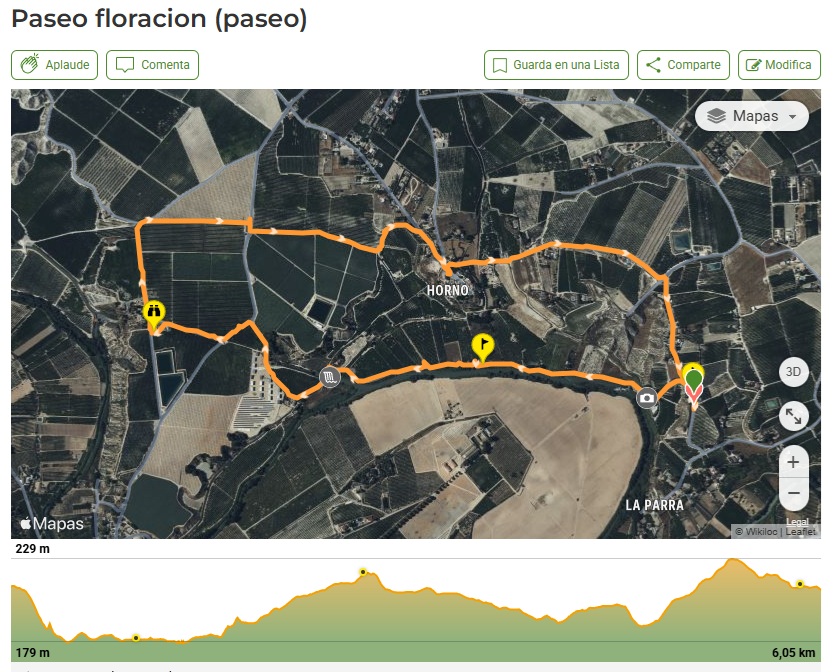

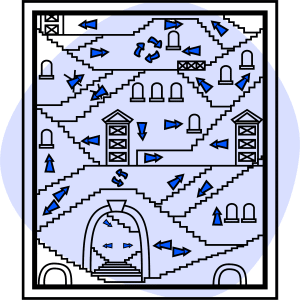

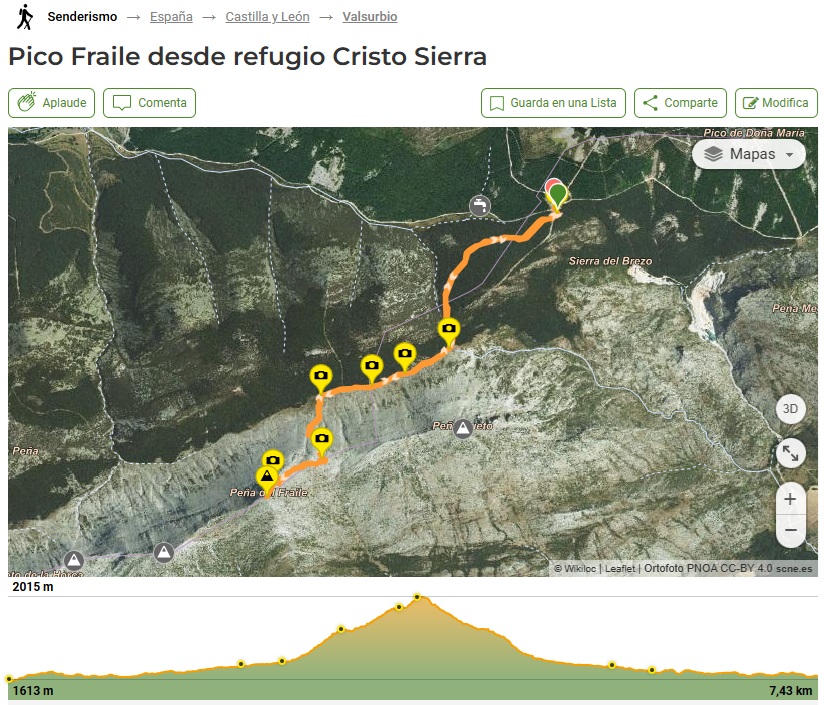

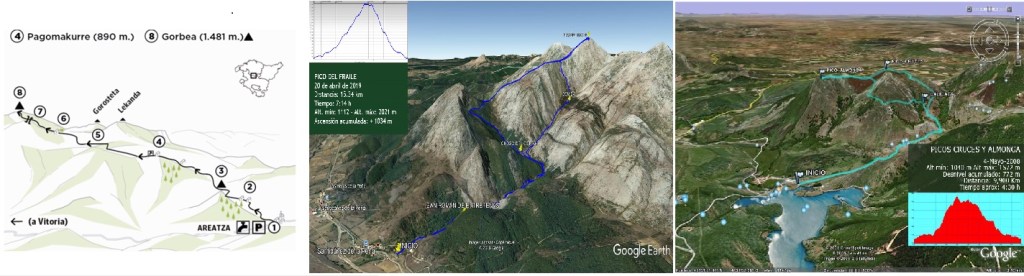

On 28 February we followed this route.

We set off from the S Espirit car park towards the Creu del Rodeno, passing the Dragón and climbing to the Redona pass (track from here on). We continued towards Xocainet, la Furiona, el Murtal and the coll del Aguila. We did not climb any peak; we went over the corresponding passes or along their lower slopes. The descent from the coll del Aguila is good, long, steep and narrow, but fine, no trouble. The stretch from the Dragón up to the coll de la Redona, where the track marks it, is badly eroded: loose ground and drops of a couple of metres which, although the risk is not very high, call for going «cuidadin» (nice and careful), unhurried and making sure of each step to avoid slipping.

Back then, in the last century…

They say in the «rincón educativo» (education corner):

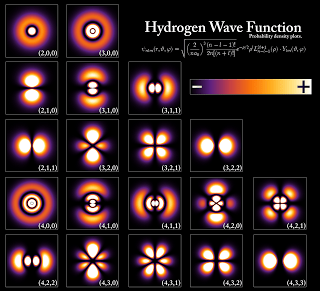

«It is known as the “Quantum-Wave Model” and was proposed by Erwin Schrödinger in 1926, building on the studies of De Broglie, Bohr and Sommerfeld.

His model conceives of electrons as undulations of matter; that is, it describes the wave behaviour of the electron.

Schrödinger suggested that the motion of electrons in the atom corresponded to wave-particle duality and that, consequently, electrons could move around the nucleus as standing waves.»

The starting point of so-called Wave Mechanics, developed by Schrödinger, is de Broglie's matter wave and the treatment of the atom as a system of continuous vibrations: the de Broglie relation

(Taken from María Cruz Boscá's website, URL inserted)
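The de Broglie relation that the sentence above points to is not reproduced in the text as it stands. In its standard textbook form, and assuming the usual symbols (λ wavelength, h Planck's constant, p = mv momentum, none of which are named in the post), it reads as follows. The second line is the standing-wave condition for a circular orbit of radius r, which is what the quoted passage alludes to:

$$
\lambda = \frac{h}{p} = \frac{h}{m v}
$$

$$
2\pi r = n\lambda, \qquad n = 1, 2, 3, \dots
$$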

In the five minutes it took me to drink an espresso I read that one person has been killed by three others armed with knives and a baseball bat; at the root of it, apparently, a dispute over noise and long-standing antisocial behaviour.

Well: this is a failure of the State. If complaints had been filed, what did it do to resolve the problem of coexistence that had been raised?

When a professional does their job badly, they bear responsibility… there are also warranty periods, and so on. And what about our shiny State?

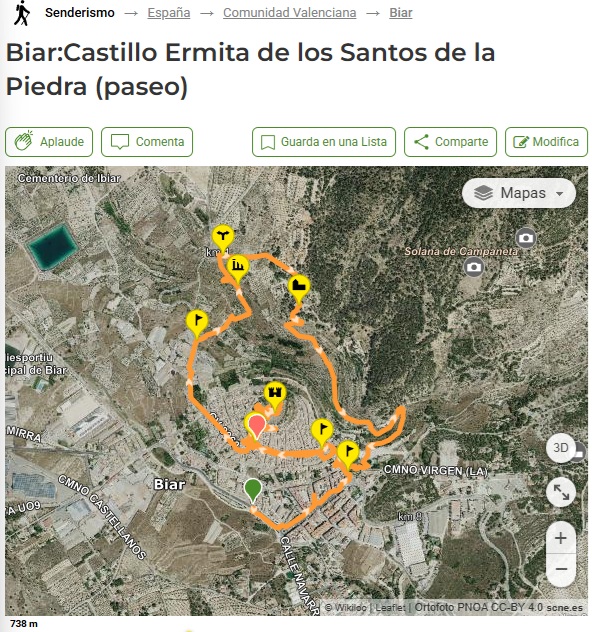

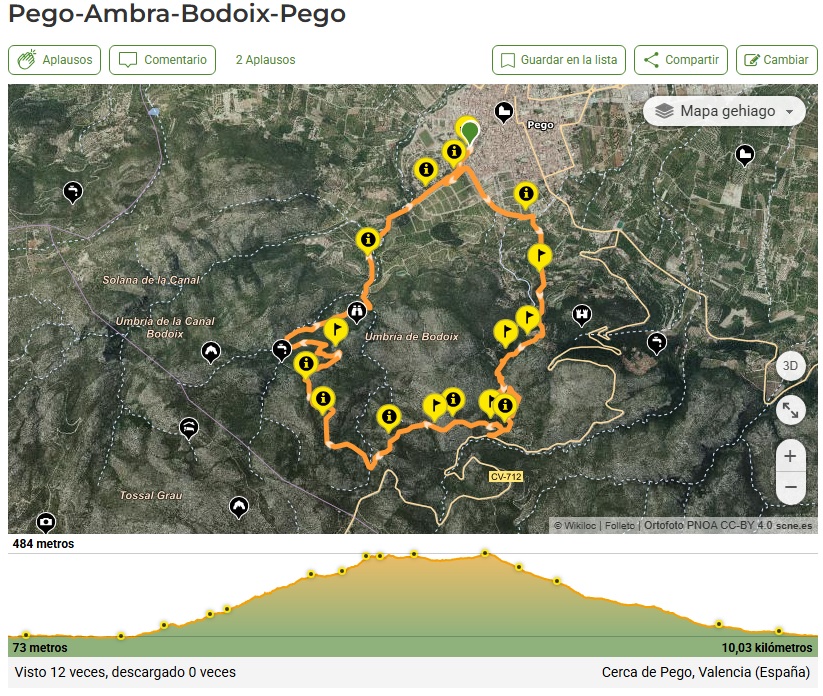

I followed a track by Moskis, but without detours, just the loop; that is, I took neither the turn-off for Ambra nor the one for Bodoix. I parked on Passeig Cervantes; ten metres further on is the Calvari promenade: it is the start of the route and there was room to park there too.

The route is circular: you leave from the Calvari and return by it. It can be done in either direction. Over the whole route, once on the trail, I met one person with three little dogs; in other words, it was not very busy.

The short stretch of limestone pavement (lapiaz) near the summit of Bodoix suggests that the short climb up to it must feel long.

The trail is well kept and trouble-free. Very good. Pego made a very good impression.

A good day; the first outing of this year, 2026 (rain, wind… things). I intend to do 22 (well, 21) routes that I have not followed before and in that way close the cycle of 400, and THAT'S IT; a change of pace, other kinds of outing (forests, for example)… I suppose, who knows: we'll see when we get there! Even so, what a beautiful, rewarding, thrilling, accessible… activity!

The numbers are here; in broad terms: 10 km, 472 m of ascent and 3 h 52 min.

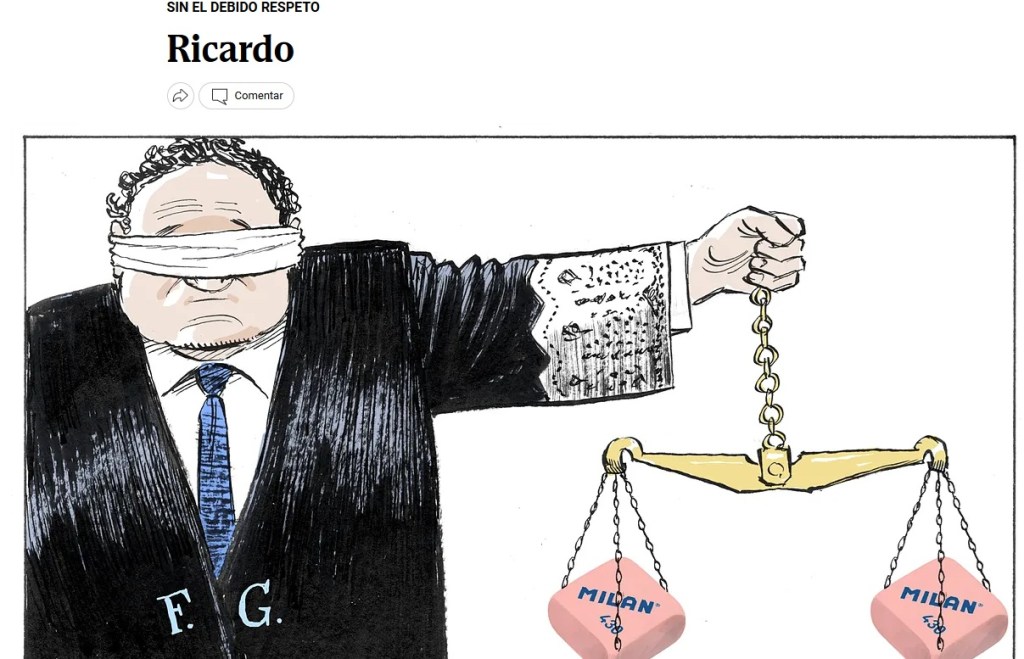

Felipe Gonzalez: I have NOT heard or read his latest remarks, but I did learn about the blank-vote business… Many of his party colleagues keep quiet and many others reproach him for abandoning the true doctrine. This vaudeville does not interest me. I want to write a few lines about organizations made of humans, a matter I had to reflect on, research and create for in the HOSS(-5) project.

Spontaneously, naturally, our Reference System is ourselves and our SELF which, broadly speaking, we believe stays the same over time in its fundamentals; it takes reflection to bathe in the river where the most permanent thing is the name it has been given. So a very human tendency is to think that the organizations we create are «also» immune to time. This is flatly contrary to our external experience: look at the evolution of IBM, or of Kodak; look at our own body and how it fits together with our mind at any given moment.

Felipe is not the same person he was in 1982, and neither is society; he believes the PSOE should change its current strategy and probably lose power, but the current leadership and all of its members of parliament do not want to lose that power under any circumstances, and they believe they are adapting to the present by keeping the brand of a political party of variable content and container, so that each person can pick the period they like best. Two opinions. Both keep the same brand, the same name, because it lets them win and keep votes.

But leaving the PSOE is painful for Felipe; for many other people who are still members, it is too. By the way, did they end up expelling Carrillo from the PCE? I don't remember. Where is the PCE in the Spain of 2026?

To sum up the summary: the PSOE Felipe talks about has disappeared and today, under the same brand, there is another organization which, from defeat to defeat, aspires to final victory; will it bring back Marxism? Will it propose leaving NATO? Will it suggest withdrawing from the EU like the UK? How should I know… today not even the «puto amo» knows that (and even if he did, this is neither the time nor the way: tactical manoeuvring has its moment).

It will not be long before we see whether the PSOE's road to irrelevance reaches its destination or whether, like the Phoenix, it rises again. That also depends on other parties, whether they exist today or not, and, obviously, on whether the society that votes in general elections looks to the future and closes and moves past wounds it does NOT want reopened (periods of mourning, individual and collective, have to end some day).

And the PP? It's around. It is a human organization, so…

Viktoriia Honcharuk, from Wall Street to the trenches of Ukraine: «I have not regretted it even once»

Written by Alberto Rojas (11/2/2026)

«The customers who walk into this café in Kyiv's most exclusive neighbourhood, livened up by the hum of the generator, eye the blonde girl in uniform who has just come in from the entrance and sat down at the back. Her military outfit contrasts with the fur coats, leather boots and astrakhan hats the women wear in the snow.

By the time you read this, Viktoriia Honcharuk, the girl in uniform, will be in Munich, where she will be one of the visible faces of the Ukrainian military effort before European politicians at the Security Conference. With a black tea in her hand, she previews her message: «If you are not prepared to fight in earnest, the best thing you can do is learn Russian».

Viktoriia, born in a small village in Ukraine, is 25 and has been saving lives on the front line since the first year of the invasion. When the Z troops entered the country, she was starting a promising career on Wall Street at just 22. She lived in Midtown Manhattan and had just signed with the investment bank Morgan Stanley, where her salary was growing thanks to the endless hours she put in every day to build her future in the world of finance. On 24 February 2022 she was in California when her phone began receiving hundreds of messages. At first she struggled to make sense of them. She was in shock. The war had begun.

«My reaction was physical. I had to get out of there and go back to my country. That is what I told my colleagues and that is what I did. I came to help, but I didn't know how. When your country is fighting to survive, comfort or fear no longer work as an excuse. I joined the Armed Forces and the first thing I did was a tactical medicine course, something I knew nothing about. By December 2022 I was on the front line», says Viktoriia, who still wears the same glasses she has on in old photos, dressed in a suit, at the entrance to the offices of the powerful American bank.

Within a few weeks she became immune to the pain and shock of seeing blood gushing, human flesh shredded like fruit pulp, searing burns. This is the life she chose over her career in finance. «I have not regretted it even once», she confirms. Her sister, who belongs to an assault unit, has it even worse, but both already have a good share of extreme experiences: «At the start of the invasion we formed a company of six people, two girls and four boys. They died one by one, and now only my sister and I are left. I had to recover the lifeless body of one of them some time after his death, when we were able to reach it. It was a horrible experience».

Honcharuk's story is that of many young Ukrainians who have been forced to defend their country and identity against the aggression of Vladimir Putin's regime, facing forced disappearance. «If I die tomorrow, I don't have much to regret. I lived a good life. I contributed to the community. If I had stayed in New York, how could I look my future children in the eye?».

In her new world she learned to evacuate wounded soldiers in a makeshift ambulance 800 metres from the front line, while the soldiers joked about their future prostheses and she tried to keep them from bleeding out. «At first I wasn't aware of the impact of this work. You stabilized someone, evacuated them quickly and then never heard of them again. Now I work in the Third Assault Brigade, whose wounded are your comrades. Months after helping them at a critical moment, you see them again and understand that they are alive because your hands gave them decisive help at the hardest moment», she says. And she ends with an example: «Not long ago a man stopped me in a supermarket. He was very happy to see me, but I didn't remember him at all. He told me: ‘You saved my life in Avdivka. I will never forget your face’. Then I remembered him. From his look of sincere gratitude I understood how important what we do here is».

She has already suffered three concussions from nearby explosions, but has never taken leave for exhaustion. For her, the turning point came when drones took over the sky and complicated her work. From 800 metres from the front, they moved back to more than 12 kilometres, which lengthened evacuation times and reduced the soldiers' chances of survival. «The risks have become lethal, almost unbearable», she says. She had a Venezuelan boyfriend, so she understands all the questions put to her in Spanish.

If this endless war ends some day, would you like to go back to your job at Morgan Stanley? Do you think it would be possible to pick up your old life in finance?

Last year I had the chance to visit the United States on a diplomatic mission and I stopped by the office. My boss pointed to my old desk and said: «Look, that seat is still empty and waiting for you».»

Well, actually, it is drawn from polls, a mini-calculation and a pinch of «supposed instinct», so: it is not so much «mine»

Seats: 67. Absolute majority: 34

| Party | Forecast seats |
| --- | --- |
| PP | 29 |
| PSOE | 19 |
| VOX | 12 |
| CHA | 3 |
| TE | 1 |
| S/I/P | 3 |

Will I get ANY of the six proposed results right? Well, NO!!

The results were: PP: 26, PSOE: 18, VOX: 14, CHA: 6, AE: 2, S/I/P: 1
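For anyone who wants to put a number on the miss, here is a small sketch that compares the forecast with the results seat by seat. The seat counts are the ones given above. One assumption is mine, not the post's: the "AE" result is compared against the "TE" forecast row, since the two lists use different labels for that slot.

```python
# Seat counts copied from the post: 67 seats, absolute majority at 34.
forecast = {"PP": 29, "PSOE": 19, "VOX": 12, "CHA": 3, "TE": 1, "S/I/P": 3}
# Assumption: the "AE" result is stored under the "TE" key so the rows line up.
result = {"PP": 26, "PSOE": 18, "VOX": 14, "CHA": 6, "TE": 2, "S/I/P": 1}

TOTAL_SEATS = 67
MAJORITY = TOTAL_SEATS // 2 + 1


def seat_errors(forecast, result):
    """Return result minus forecast for every party in the forecast."""
    return {party: result[party] - forecast[party] for party in forecast}


errors = seat_errors(forecast, result)
exact_hits = [party for party, err in errors.items() if err == 0]
total_abs_error = sum(abs(err) for err in errors.values())

print(errors)
print("exact hits:", exact_hits)
print("total absolute error:", total_abs_error, "seats")
```

Both columns add up to 67, so every seat over-assigned to one party is a seat under-assigned to another.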

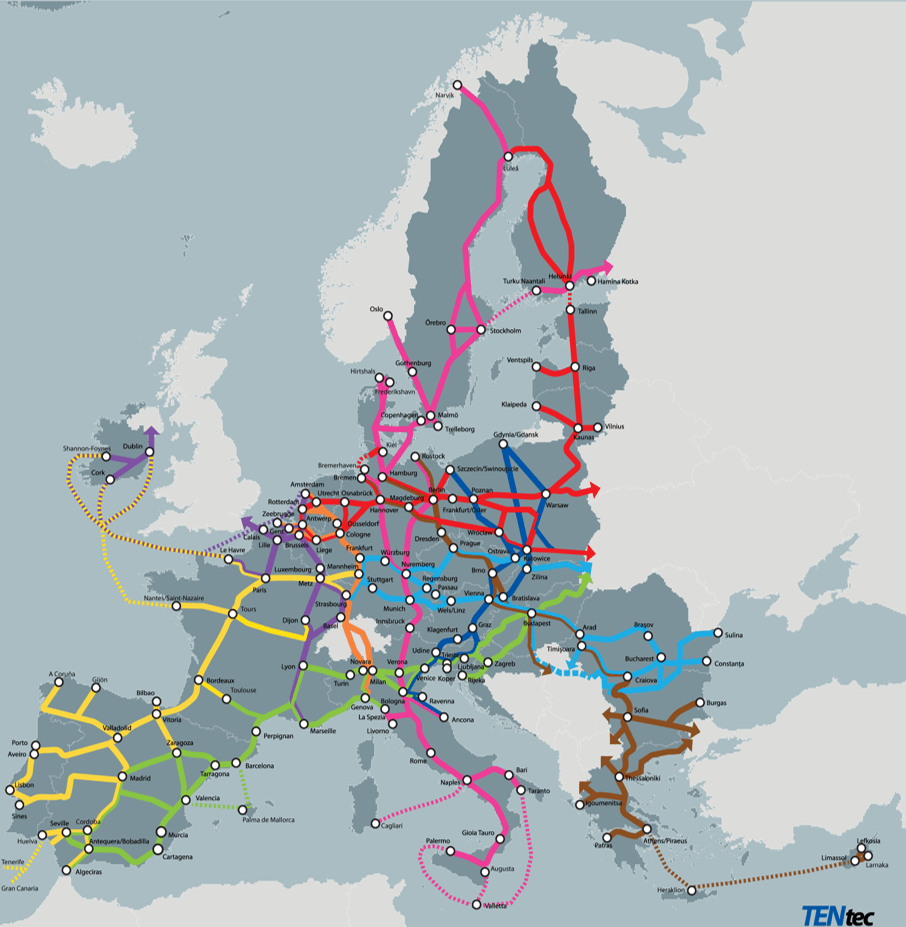

Personally, the European Union is a nail that fastens us to reality, one more step in fitting organization and technology together, a way of sharing common goals more closely… And, for us Spaniards, a shock treatment against organizational regressions (fascisms, communisms, feudalisms…).

I love the euro, I love the support they have given us… I hope we become net contributors and help other countries that join the EU.

I would like to have a European Union passport; I would love to have a European Constitution.

None of the above means I am blind: the EU has very serious shortcomings. Here I set out three structural weaknesses that are critical:

1. Unanimity versus bureaucratic slowness (economic giant, political dwarf?)

2. A monetary union without a full fiscal union (common harmonized taxes, unified debt, a common treasury…)

3. Lack of strategic autonomy (what is there to say about the «European army» or about energy self-sufficiency/balancing?)

And with these issues sitting in the inkwell for decades, their current honourable members busy themselves with bottle caps and, lately, with single-serve sachets in restaurants.

Could perspective be lost any further? Could the meaning of the word «prioritize» be any more unknown?

The next step is not going to be the material used for the elastic in knickers and underpants (because of global warming too): noooooooooo!!! Or is it?

Where is the elephant, honourable members?

A bright friend from my university student days published an article in January 2026 that opens by citing the shocks reality deals us, and she mentions Venezuela, Iran and Greenland.

Alberto Mas and Carlos Garcés write in El Mundo, Wednesday 28 January 2026: With the fourth anniversary of the start of the war between Russia and Ukraine approaching, the number of casualties among soldiers killed, wounded or missing is thought to be around two million, according to the Center for Strategic and International Studies (CSIS).

Venezuela, Iran, Greenland; how can she forget the aggression by Putin and his apparatus? They are, without doubt, something more than a shock.

Four years of war on the ground with thousands upon thousands of dead… are they forgotten, ignored? But Greenland, of course.

Whoever tells half the truth tells the worst LIE.

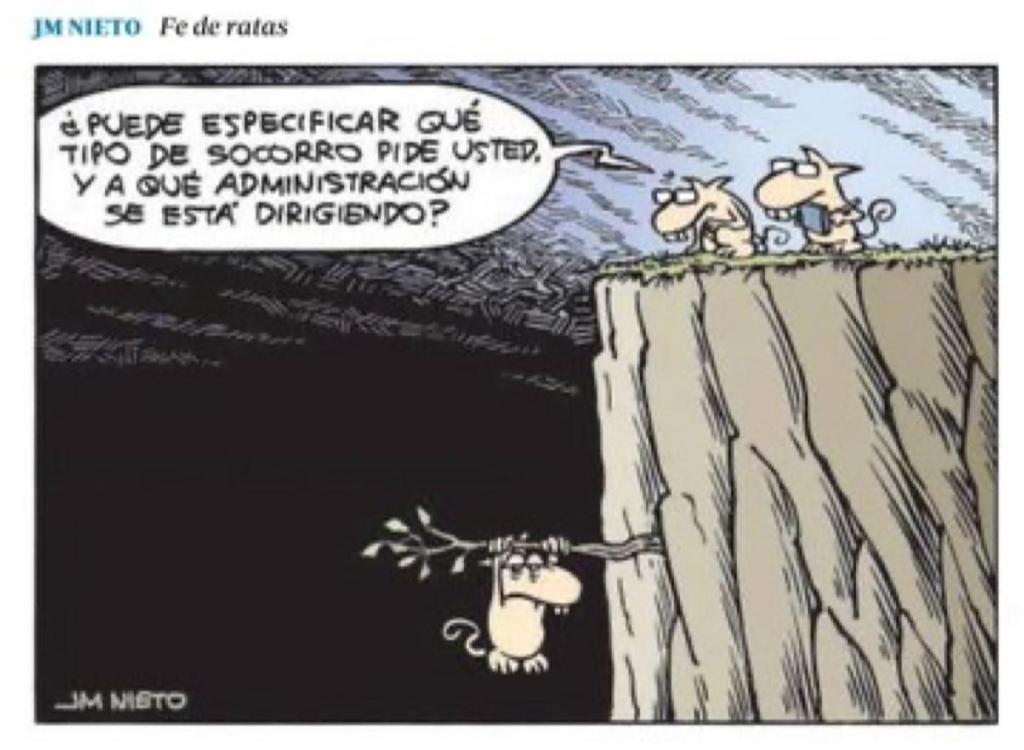

When I speak of an «organizational collapse» I mean that the organization in question does NOT serve the purpose for which it was created and is maintained; it exists nominally, but does NOT perform its functions. Well, I have the feeling that the organizational collapse of the State, of Spain, is drawing closer… if we are not already immersed in that stage.

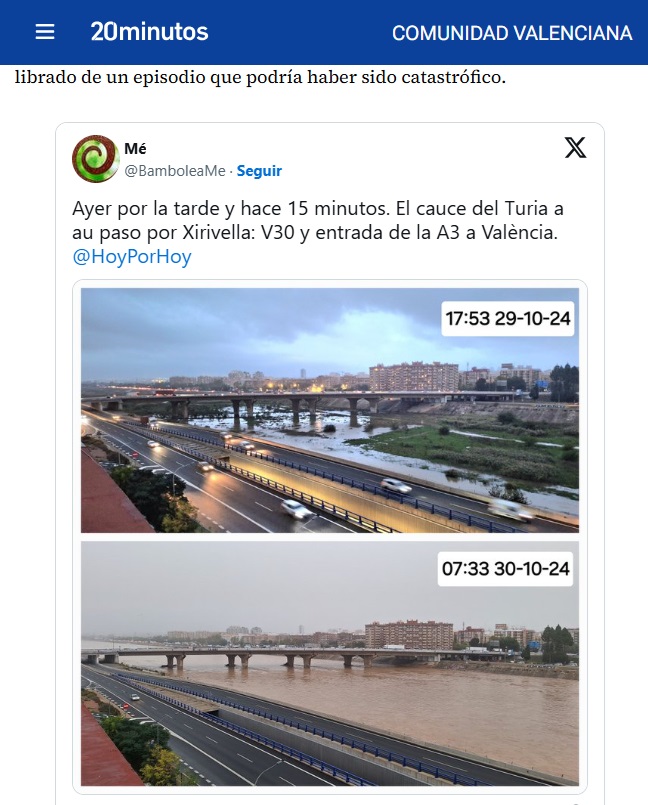

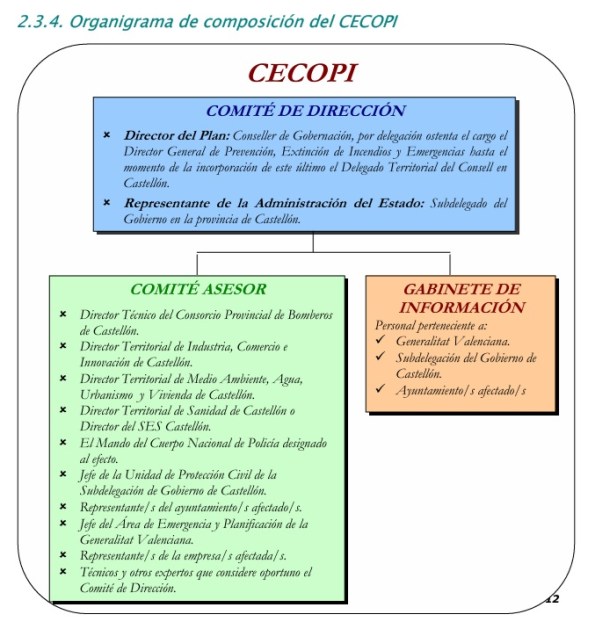

This is the age of Coordination Committees of the CECOPI type (Centro de Coordinación de Procedimientos Inutiles, «Coordination Centre for Useless Procedures»); crowded, multi-level, inclusive committees, etc.: pure theatre.

It may be water, it may be trains, it may be fire, it may be the electricity distribution grid, a justice system that takes years and years, it may be a badly prepared dose or a machine that does not fit through a tunnel, it may be a ship or the latest PISA report…

Today this, tomorrow that.

Interesting texts:

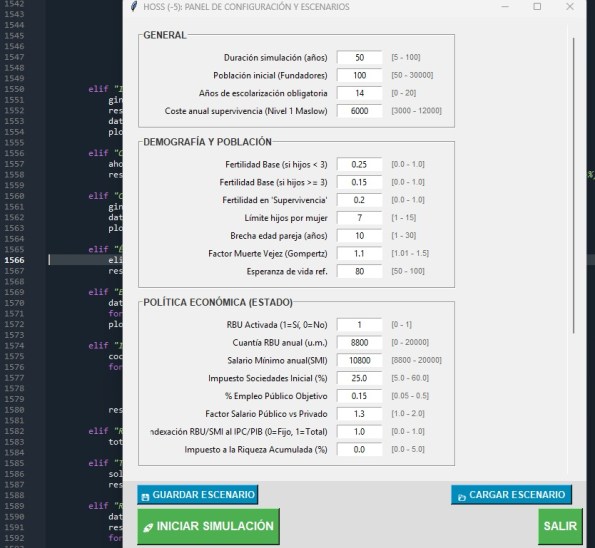

The code is released on GitHub under the MIT licence (https://github.com/invatresp/HOSS–5-)

# HOSS(-5) · Human Organizations Simulations Start

## 1. What is HOSS(-5)?

**HOSS(-5)** is an advanced prototype of a social simulation developed in Python. Its aim is to explore how simple rules, applied to individuals with intentionality, generate complex social, economic and organizational patterns over time.

It does not claim to predict real societies. It is an experimental environment for **comparing scenarios**, observing **emergent regimes** and reflecting on the **organizational design of society** from an engineering perspective.

## 2. The underlying question

HOSS is born of a simple but ambitious question:

> *Can we simulate how a human society organizes itself without reducing people to mere equations?*

Human society combines rationality and unpredictability, intentionality and chance. HOSS tries to model that intermediate space where individual decisions, resources and collective rules interact and produce non-trivial results.

## 3. What the code does today

The **HOSS(-5)** version implements a small-scale artificial society in which each individual (ph, *punto humano*, "human point"):

* Is born, is educated, works, consumes and disappears.

* Makes decisions conditioned by their psychology, economic status and intentionality.

* Interacts indirectly with other individuals through families, companies and the State.

The system can simulate a few thousand ph() over tens of virtual years and generate large volumes of data for later analysis.

## 4. Model architecture

### 4.1 The agent: ph (punto humano)

Each individual is modelled as an autonomous object with:

* **Life cycle:** gestation, birth and death (probabilistic and sensitive to events).

* **Psychology:** psychological matrix (archetype × character).

* **Decision:** mapping in a space of intentionality and economic status.

* **Annual activity:** work, education, leisure, organization and consumption.

* **Evolution:** professional career, income improvement through training or chance.
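To make the idea of an agent with a probabilistic life cycle concrete, here is a minimal sketch. It is not the HOSS code: the class name `PuntoHumano`, its fields and the age-dependent death probability are all assumptions chosen for illustration, and psychology, decisions and yearly activity are left out.

```python
import random
from dataclasses import dataclass


@dataclass
class PuntoHumano:
    """Hypothetical minimal agent; names and fields are illustrative only."""
    age: int = 0
    alive: bool = True

    def death_probability(self) -> float:
        # Assumed hazard: small baseline that grows with age, capped at 1.
        return min(1.0, 0.001 + (self.age / 100.0) ** 4)

    def step_year(self, rng: random.Random) -> None:
        """Advance one virtual year: the agent either dies or grows older."""
        if not self.alive:
            return
        if rng.random() < self.death_probability():
            self.alive = False
            return
        self.age += 1


def simulate(n_agents: int, years: int, seed: int = 0) -> list:
    """Return the number of living agents at the end of each year (no births)."""
    rng = random.Random(seed)
    population = [PuntoHumano() for _ in range(n_agents)]
    alive_per_year = []
    for _ in range(years):
        for ph in population:
            ph.step_year(rng)
        alive_per_year.append(sum(ph.alive for ph in population))
    return alive_per_year


if __name__ == "__main__":
    print(simulate(n_agents=1000, years=100)[::10])
```

A seeded `random.Random` is passed in explicitly so that two runs of the same scenario can be compared like for like.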

### 4.2 Social environment and macroeconomy

* **Families, companies** (10 sectors) and **State**.

* Production and prices through simple rules (not a full free market).

* Salaries modulated by ~200 professional categories.

* State with public services, employment and **Universal Basic Income (UBI; RBU in Spanish)**.

* Automatic annual adjustment of taxes and UBI to seek fiscal balance.
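The annual adjustment of taxes and UBI can be pictured as a simple feedback rule. The sketch below is an assumption about what such a rule might look like, not the rule HOSS implements: the function name, its parameters and the fixed 1% step are all hypothetical.

```python
def adjust_fiscal_policy(tax_rate, ubi, tax_base, other_spending, population,
                         step=0.01, tolerance=0.0):
    """Return (new_tax_rate, new_ubi) after one yearly adjustment.

    Assumed rule: on a deficit, raise the tax rate by `step` and trim the UBI
    by the same fraction; on a surplus, do the opposite; otherwise do nothing.
    """
    revenue = tax_rate * tax_base
    spending = other_spending + ubi * population
    balance = revenue - spending

    if balance < -tolerance:        # deficit: more tax, less UBI
        tax_rate = min(1.0, tax_rate + step)
        ubi = ubi * (1.0 - step)
    elif balance > tolerance:       # surplus: less tax, more UBI
        tax_rate = max(0.0, tax_rate - step)
        ubi = ubi * (1.0 + step)
    return tax_rate, ubi


if __name__ == "__main__":
    rate, ubi = 0.30, 1000.0
    for year in range(5):
        rate, ubi = adjust_fiscal_policy(rate, ubi, tax_base=1_000_000,
                                         other_spending=200_000, population=200)
        print(year, round(rate, 2), round(ubi, 2))
```

Small fixed steps keep the rule easy to reason about, at the cost of converging slowly when the initial imbalance is large.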

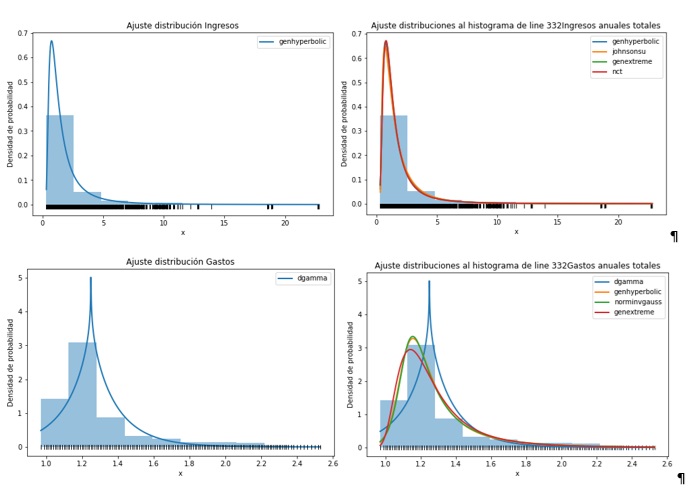

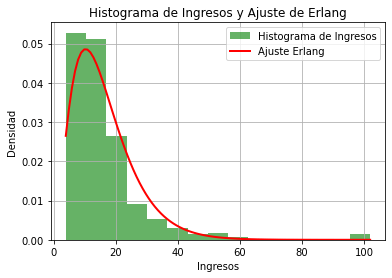

### 4.3 System metrics

* Population, GDP, income.

* Gini index and HDI.

* Comparison against Gamma distributions.
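As a reference point for the Gini index listed above, this is the textbook computation on a list of individual incomes. It is a generic sketch, not taken from the HOSS repository, and the function name `gini` is my own.

```python
def gini(values):
    """Gini index of a list of non-negative incomes (0 = perfect equality)."""
    xs = sorted(values)
    n = len(xs)
    total = sum(xs)
    if n == 0 or total == 0:
        return 0.0
    # G = 2 * sum(i * x_i) / (n * sum(x)) - (n + 1) / n, i = 1..n over sorted data
    weighted = sum(i * x for i, x in enumerate(xs, start=1))
    return 2.0 * weighted / (n * total) - (n + 1.0) / n


if __name__ == "__main__":
    print(gini([1, 1, 1, 1]))   # everyone earns the same
    print(gini([0, 0, 0, 1]))   # one person holds everything
```

With a finite population of n individuals the maximum value is (n - 1)/n rather than 1, which matters for small simulated societies.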

## 5. Technical architecture

* Complete replacement of MySQL with **in-memory structures** (high performance).

* Final persistence to binary, CSV and XLSX files for external auditing.

* Scenario and parameter configuration from a GUI.

* Debug version without multiprocessing to guarantee data consistency.
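The "keep everything in memory, persist at the end" pattern can be sketched with the standard library alone. The record layout, file names and function names below are assumptions for illustration. XLSX output is omitted because it needs a third-party package.

```python
import csv
import pickle
from pathlib import Path


def save_results(records, out_dir):
    """Write in-memory records (a list of dicts with identical keys, an
    assumed layout) to a binary file and a CSV file; return both paths."""
    if not records:
        raise ValueError("nothing to save")
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)

    pkl_path = out / "results.pkl"
    with open(pkl_path, "wb") as fh:
        pickle.dump(records, fh)            # exact binary snapshot

    csv_path = out / "results.csv"
    with open(csv_path, "w", newline="", encoding="utf-8") as fh:
        writer = csv.DictWriter(fh, fieldnames=list(records[0].keys()))
        writer.writeheader()
        writer.writerows(records)           # human-auditable copy
    return pkl_path, csv_path


def load_binary(pkl_path):
    """Read back the binary snapshot written by save_results."""
    with open(pkl_path, "rb") as fh:
        return pickle.load(fh)
```

The binary file restores the exact Python objects, while the CSV is the copy an external auditor can open without running any code.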

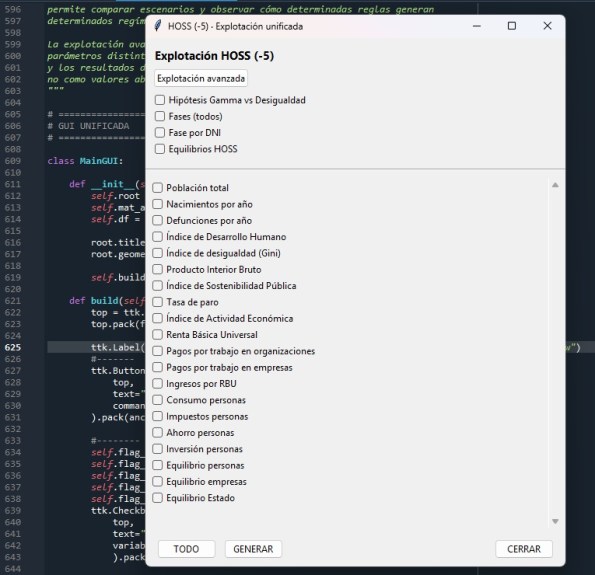

## 6. Exploitation and analysis of results

HOSS strictly separates **simulation and exploitation**. The analysis does not modify the model or introduce new hypotheses.

### 6.1 Micro level · Phase spaces

* Individual trajectories in state spaces.

* Observation of stable regimes, dispersion and biographical paths.

* Exploratory, not predictive.

### 6.2 Macro level · Time series

* Aggregate variables year by year.

* Detection of trends, cycles and regime changes.

* Interpretation as emergent properties of the system.

### 6.3 Micro–macro contrast · Gamma hypothesis

* Annual fit of Gamma distributions to individual incomes.

* Comparison of the α parameter with Gini and HDI.

* Compatible structural relationship (not causal).
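One way to see why α and the Gini index move together is that, for an exact Gamma distribution, the Gini index depends only on the shape parameter. The sketch below assumes a method-of-moments fit (the README does not say which fitting method HOSS uses) and hypothetical function names. It relies on the known closed form G = Γ(α + 1/2) / (√π · Γ(α + 1)).

```python
import math


def fit_gamma_moments(incomes):
    """Method-of-moments estimate of Gamma shape (alpha) and scale (theta).

    Assumption: a moments fit stands in for whatever fit HOSS performs.
    """
    n = len(incomes)
    mean = sum(incomes) / n
    var = sum((x - mean) ** 2 for x in incomes) / n
    alpha = mean * mean / var
    theta = var / mean
    return alpha, theta


def gamma_gini(alpha):
    """Gini index implied by a Gamma distribution with shape alpha."""
    log_ratio = math.lgamma(alpha + 0.5) - math.lgamma(alpha + 1.0)
    return math.exp(log_ratio) / math.sqrt(math.pi)


if __name__ == "__main__":
    for alpha in (0.5, 1.0, 2.0, 5.0):
        print(alpha, round(gamma_gini(alpha), 3))
```

A larger α means a more concentrated income distribution and therefore a lower implied Gini index. Comparing this implied value with the Gini index measured directly on the simulated incomes is one way to check how well the Gamma hypothesis holds in a given year.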

## 7. What it does NOT do (current limitations)

* There are no complex direct social relationships between individuals.

* The market is simulated, not a dynamic supply–demand one.

* Organizations are black boxes (no detailed internal protocols).

* It does not aim at direct empirical validation.

**HOSS(-5) is a prototype, not a final version.**

## 8. Relationship with the DOS project

HOSS is part of a broader body of work: **Diseño Organizativo de la Sociedad (DOS, Organizational Design of Society)**, developed over more than 15 years from academic research, organizational analysis and theoretical reflection.

The central hypothesis is that social organizational design can be approached with a rigour comparable to that of industrial, architectural or software design, integrating models, data and subsequent validation.

## 9. Project status and future

HOSS(-5) is not the end. Conceptually, the project would need several more phases to approach a functional “ZERO” version.

Possible future lines:

In the absence of falsification, it seems worthwhile to devote effort to obtaining metrics for the following macroscopic variables, drawn from the strategic planning processes of large cities and metropolitan areas:

* Leadership (Lrec, Lgen).

* Citizen temperature (TC) and commitment of the agents (CAG).

* Framework organization (OM) and institutional performance (OT).

* Handling of political and metropolitan complexity (MC).

This repository is published as an **open seed**.

---

## 10. Personal note from the author

I am 76 today. I keep walking, but I am also closing chapters.

HOSS is released in the hope that someone, at some point, will want to pick it up, discuss it, criticize it and transform it.

The HOSS(-5) version was developed with the collaboration of **Gemini (Google)** and **ChatGPT (OpenAI)**. The ideas, the conceptual framework and the critical validation are human; the implementation was AI-assisted.

> *“What do I hope to find, going deep into the wind?”*

> — Taneda Santōka

## 11. Links and contact

Supplementary material relating to the HOSS(-6) version (texts, videos and code):

* [Adiós HOSS 1](https://onuglobal.com/2025/04/11/adios-hoss-1/)

* [Adiós HOSS 2](https://onuglobal.com/2025/04/11/adios-hoss-2/)

* [Adiós HOSS 3](https://onuglobal.com/2025/04/11/adios-hoss-3/)

**Contact:** invatresp@gmail.com

Thank you for reading this far.

**José Quintás · Gemini · ChatGPT**

DOS is the general approach and its rationale; HOSS is a simulation. Two different matters, closely related.

How time flies!

25 years, gone like a sigh… How will AI affect this world?

Here you can find the celebration video; you just have to press «Play video»:

On to the celebration video!

Dear friend,

The Instituto Juan de Mariana presents the 2025 edition of its Día de la Deuda (Debt Day) report.

This date marks the symbolic moment at which Spain's public administrations exhaust all their annual revenue and begin to finance themselves exclusively through debt.

The evolution of public finances reveals a worrying drift.

Currently, public debt is growing by around 164 million euros a day, equivalent to more than 114,000 euros per minute. The annual cost of financing it already stands at 39,000 million euros. Over the last two decades, debt per capita has gone from 9,200 to more than 33,300 euros.

Social Security accounts for the bulk of the structural imbalance, with a negative contributory balance of more than 60,000 million euros. This coexists with an oversized public apparatus: in 2024 more than 41,000 million euros was granted in subsidies and 19,834 public bodies were in operation.

You can read the full report at this link: Download the Día de la Deuda 2025 report (PDF)


Main findings of the report:

- Persistent structural deficit: Spain closed 2024 with a deficit of 3.16% of GDP and debt of 101.8%. The imbalance is concentrated in the Central Administration and Social Security, whose position is artificially sustained through state transfers.

- Pension system at risk: One in every four euros paid out by Social Security is financed with deficit. Pension spending already absorbs 35% of tax revenue and could reach 47% by 2050, crowding out other budget priorities.

- Worrying evolution of the debt: In just two decades Spain has doubled its debt-to-GDP ratio, the largest increase among the main EU economies. The apparent stability since the pandemic is explained by nominal GDP growth, not by real fiscal consolidation.

- Rising interest costs: The interest bill already represents 2.4% of GDP, which narrows the room for essential public policies. This figure is expected to rise to 2.9% in the coming years.

- Tax effort diverted: Interest payments consume the equivalent of about 83% of Corporate Tax revenue, 38% of VAT, 27% of personal income tax (IRPF) and almost a fifth of social contributions.

- Intergenerational debt: Debt per inhabitant has tripled since 2004, exceeding the European Union average. It is a clear sign of the cost passed on to future generations.

- Rising spending despite record revenue: In 2024 revenue reached 42.3% of GDP, but spending was 45.4%. Spain has run consecutive deficits since 2008, a sign of a problem of excess spending, not of insufficient revenue.

- Weak fiscal discipline: Between 2004 and 2024 Spain complied with only about 35% of European fiscal rules. Since 2023 it has been operating with rolled-over budgets, and 2025 execution already far exceeds the levels set in those accounts.

- Situation of Social Security: It has accumulated 126,000 million euros in debt and a negative net worth of close to –98,526 million. Since 2005 the State has transferred more than 400,000 million euros to keep the pension system going.

- Breakdown of Debt Day by administration:

  - State: 10 November

  - Social Security: 10 December

  - Autonomous Communities: 28 December

  - Local Entities: 31 December

  Without transfers, the pension system would run out of resources on 3 October.

- The 2025 Debt Clock: Debt grows at a rate of 6.8 million euros per hour, ~114,000 euros per minute and ~1,900 per second.

- There is room for adjustment: Reports on administrative efficiency estimate potential savings of up to 60,000 million euros a year. The challenge is to audit, evaluate by results and dismantle unproductive and clientelist spending structures.

Instituto Juan de Mariana
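The debt-clock figures in the newsletter can be cross-checked with a few divisions. This sketch takes the quoted daily growth (around 164 million euros) as its only input; the function name is my own. The results are approximate, since the newsletter does not give the unrounded daily figure.

```python
DAILY_GROWTH_EUR = 164_000_000  # "around 164 million euros a day", as quoted


def debt_clock(daily_growth):
    """Split a daily amount into per-hour, per-minute and per-second rates."""
    per_hour = daily_growth / 24
    per_minute = per_hour / 60
    per_second = per_minute / 60
    return per_hour, per_minute, per_second


per_hour, per_minute, per_second = debt_clock(DAILY_GROWTH_EUR)
print(f"per hour:   {per_hour:,.0f} EUR")
print(f"per minute: {per_minute:,.0f} EUR")
print(f"per second: {per_second:,.0f} EUR")
```

The hourly and per-second values match the quoted 6.8 million and ~1,900. The per-minute value comes out just under 114,000, which is consistent with the daily figure being rounded.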

I assume you uttered this sentence verbatim, since it is in quotation marks. Well, I will give you my opinion even though, obviously, you have not asked for it.

I find it hard to see your wish come true, because the Partido Socialista Obrero Español (PSOE) has been replaced, painlessly and in keeping with its internal dynamics, by the organization called «Pedro Sánchez Obrero Español», which answers to the same initials (PSOE).

That is, the PSOE you long for simply does NOT exist; whether it can be resurrected without turning out a Frankenstein is another matter. Organizations, Mr Page, have their time; the Communist Party, Falange Española… exist and, to my mind, it is fine that they exist but, frankly, they do not worry me electorally.

You propose going back to the earlier dynamic; for example, engaging in self-criticism. The current PSOE has critical capacity towards whatever is not itself and its current partners; it also shows that critical capacity towards some people who do engage in self-criticism (Felipe, Guerra, Lambán, you…), but in my opinion self-criticism is not one of this organization's qualities.

The «Pedro Sánchez OE» has no red lines it cannot cross in order to be on the right side of History; that means its members of parliament do NOT have those red lines either. Take the recent case of the MPs for Extremadura who voted in favour of closing nuclear plants, only to come out saying that this trifle should cause no concern because, in that territory, what they helped approve will NOT be applied to them. Unbelievable.

I do not know of a single PSOE member of parliament who feels uncomfortable because:

- Inflation is rising, there is a housing crisis, youth unemployment stubbornly persists… But so what? We are the engine of Europe

- Have there been notable changes of position? Let us make a virtue of necessity

- After one setback comes another… Oh well: more «fontanería» (backroom plumbing)!!! More leadership!!! More shifting of responsibility onto someone else's back (for example, if they wear a robe)!!! More manoeuvres, more democratic, anti-fascist and progressive flag-waving

The only ones who may have some qualms are the voters of this PSOE, not necessarily PSOE members; will they have red lines? I don't know.

How many will have considered not voting for Pedro Sánchez IF:

- He manages three years on rolled-over budgets (please, listen to the no-confidence motion against Rajoy)

- Half a dozen ministers «lead» their respective autonomous communities (CCAA)?

- Inflation picks up and passes 3%

- The EU voices doubts about the Government's handling of the media, the CIS, the independence of the judiciary…

- The judicial agenda escalates and escalates…

As things stand, voters will have their say when Pedro Sánchez calls elections and, at present, he sees no reason to until 2027; of course, ERC, JUNTS, PNV and Bildu see no reason AT ALL to call elections.

What do I mean? Pedro Sánchez's party will win or sink with Pedro Sánchez.

By the way, as of today I see clearly that the PSOE candidate in the next elections will be Pedro Sánchez, and I am not so sure that the PSOE candidate for Castilla-La Mancha will be you.

By the way, given the current paralysis, the cases at trial (even if they involve people our President does not know personally) and other matters (the upcoming regional elections will indicate the trend), I am of the opinion that general elections should be brought forward, to be held around May 2026, for example.

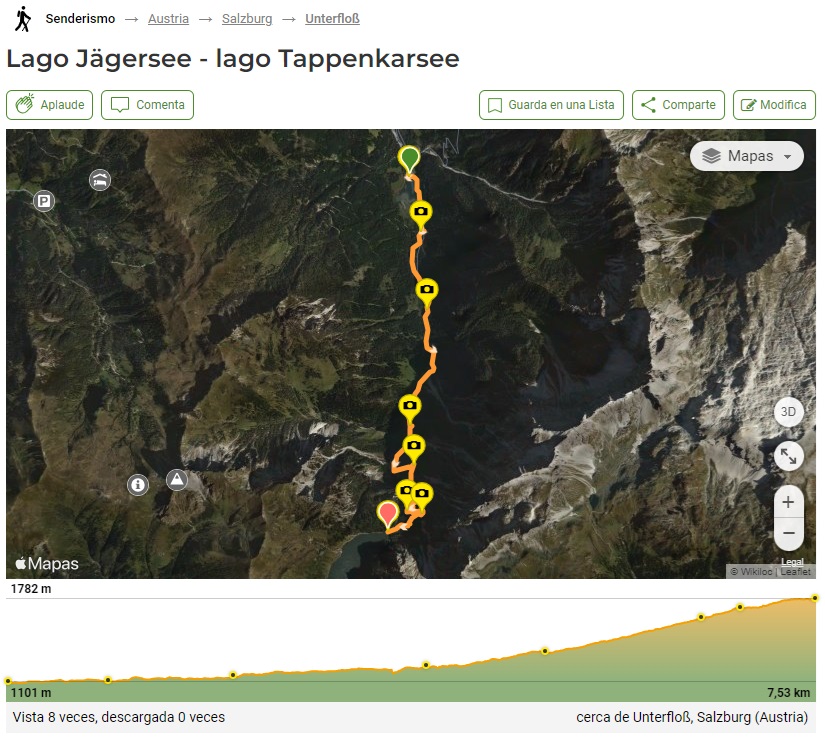

The data are here, on Wikiloc

The stretch from the turn-off to the Cueva Monedera is short but very intense; several factors make it «a touch extreme»: there are no zigzags, the ground is loose and there are sections steeper than 20%. You walk through vegetation so, all in all, even if you tumbled I do not think you would go far… a couple of metres perhaps… a bit more on the way down… but on this route it is uphill.

Otherwise, an excellent day, and so was the meal at El Reino.

Yesterday I had to go to the Peset area and had a café con leche here. I had never been in and did not know it existed: La Paca

A clean, bright, well «turned-out» place, with good coffee at below the «normal» price, friendly staff, occasional special offers and a good menu of sandwiches and tapas for a mid-morning meal with friends.

«El niño 44» (Child 44), according to «Sensacine»:

«Based on the novel of the same name by Tom Rob Smith, set in Russia under the Soviet Union. Leo Stepanovich Demidov is a pro-Stalin secret service agent who decides to investigate a series of child murders, in a country where this type of crime supposedly does not exist. The state does not want to see reality and, instead of trying to catch the criminal, decides to cover up the case and exile the policeman. But Leo does not let the unjust decision pass and, weighing up the danger of the case, decides, with his wife's help, to continue the investigation until he finds the culprit.

The book is in turn inspired by Andrei Chikatilo, a serial killer who over 20 years killed more than 52 people, mostly children. It also reflects what the education system, the orphanages and the treatment of homosexuals were like in the USSR at the time, etc.»

I saw this film some time ago.

I read the news about the Ertzaintza's statistics on the origin of the people it detains: 63% of foreign origin.

What prompts this post? The comments of a certain journalist and a certain politician, both «progressive», who do NOT look kindly on the publication of those figures because it contradicts the official ideology of what they have taken to calling «progressivism». Just as murder could NOT exist in the paradise of dear father Stalin (those committed by the USSR State itself would go under another name or category), for such professionals it is obvious that IF reality does NOT agree with their ideology, one «scientific» solution remains: hide the data.

There is a long (very long) distance between Stalin's regime and the «current regime in Spain», but some are showing signs…!

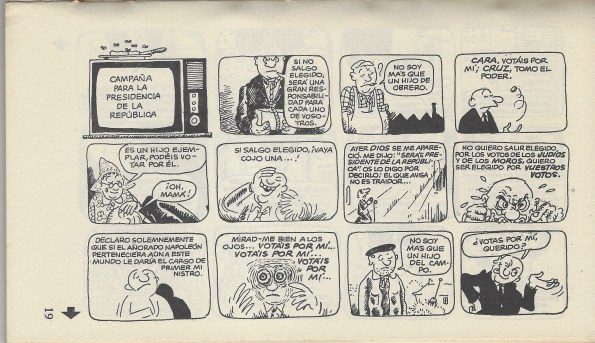

I take this gif from Tom Perez's blog:

https://historiacontemporanea-tomperez.blogspot.com/2021

I recommend this film which, at least, treats things with humour:

And in case anyone thinks I am «exaggerating», here is a note about Marlaska, reported by El Correo:

It's enough to make you «suspicious»

In November 2025 I saw this graffiti near the Hospital de la Malvarrosa

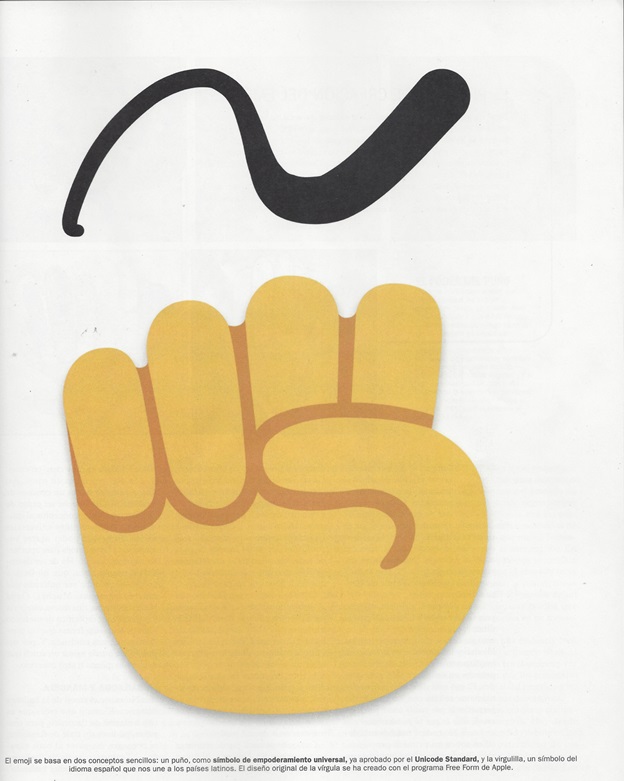

It caught my attention so much that I photographed it, and I analyse it now.

Knowing that I may be wrong, not in my opinions (which are what they are) but in some assumption I make… here we go.

1st- This is a person who has something to say and paints it; I venture that they are young. They do not write in capitals, do not shout, do not pick on anyone; they just say it. I do not know why they leave the «d» off «salu»… they have a curious «r».

2nd- They have enough mental capacity to prioritise what matters most to them as a person: family, health and freedom. This trio can be arranged in 6 ways (permutations without repetition). I mean that, even among people who share the trio, there would be no unanimity; the same person might even change the order under certain circumstances.

3rd- Their financial position is comfortable enough that this concern does not appear specifically; they get by with what they have.

I like this unknown person. May they have a long life; I think they will steer it very well, within their world and their circumstances.

Note.- The prioritisations that can be made with those three elements, exaggerated, exacerbated… would give rise to six «political» options which, with the due splits, could turn into eight or ten opposing groups, to confront and hate each other a little more every day. This is science fiction! This does not happen!
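The six orderings mentioned above can be listed mechanically. This is only an illustrative sketch: the English labels are my own rendering of the three words in the graffiti, and the script simply enumerates the 3! = 6 permutations without repetition.

```python
from itertools import permutations

# The three priorities from the graffiti (labels are an English rendering).
priorities = ["family", "health", "freedom"]

# Every possible ranking of the three items, most important first.
orderings = list(permutations(priorities))

for rank, order in enumerate(orderings, start=1):
    print(rank, " > ".join(order))

print("total orderings:", len(orderings))
```

With four priorities instead of three the count would already be 24, which hints at how quickly the «options» multiply.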

PixelCuántico refers to a YouTube channel; I have no further details, but the video I embed in this post speaks for itself about its author's knowledge, command of the subject, clarity of exposition and educational, popularising work. Of Alessio Mangoni, I know only this text which, for lovers of paper, may be useful to have at hand.

I think this PixelCuántico video is a work of art, something hard to surpass, a work of masters in the noblest sense of the word.

After watching the video straight through, that is, without pausing it and without auxiliary aids (such as pencil and paper, for example), I have come to the conclusion that, besides my own limitations, 50 years do not pass in vain (I will return the notes on Functional Analysis and Hilbert Spaces, by Prof. F. J. Yndurain, to my library of memories).

It seems that this mathematics is implemented, in whole or in part, in Python plus numpy. For example, on deepnote I found:

«Python Methods for Dirac Notation

It's useful for us to consider how we might code quantum states represented in Dirac notation as well as some of the mathematical operations one can apply to states.

Let’s start with a constructor method for a Ket. This method should take in two complex numbers as input, and return a column vector. This can be accomplished quickly with:

    import numpy as np

    def Ket(a, b):
        # Column vector built from two complex amplitudes
        return np.array([[a], [b]])

A Bra constructor is a bit more difficult because it could have two possible inputs.

- We could give a Bra constructor two complex numbers, and have it output a row vector.

- We could give a Bra constructor a Ket, and have it output the corresponding row vector.

We can accomplish this duality with an if statement.

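    def Bra(a, b=None):
        if b is None:
            # One argument: it must be a Ket, so return its conjugate transpose
            return a.conjugate().transpose()
        else:
            # Two arguments: two complex numbers, returned as a row vector
            return np.array([[a, b]])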

Note the use of «None». If we only pass one thing to the Bra constructor, that thing must be a Ket, so just perform the conjugate transpose. If we pass two things into the Bra constructor, then those two things must be individual complex numbers.»

The above notwithstanding, I think one can work on paper with Dirac notation and, when the time comes to calculate, use Python and import the necessary tools.
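As a small illustration of that workflow, here is a sketch (my own, not taken from the deepnote page) that does the paper calculation ⟨φ|ψ⟩ numerically. The helper names `ket` and `bra` and the two example states are assumptions chosen for the example; only standard numpy operations are used.

```python
import numpy as np

def ket(a, b):
    """Column vector for the state a|0> + b|1> (hypothetical helper)."""
    return np.array([[a], [b]], dtype=complex)

def bra(k):
    """Row vector dual to a ket: its conjugate transpose."""
    return k.conj().T

# Example states, chosen only for illustration.
psi = ket(1 / np.sqrt(2), 1j / np.sqrt(2))   # (|0> + i|1>) / sqrt(2)
phi = ket(1, 0)                              # |0>

norm_sq = (bra(psi) @ psi).item()      # <psi|psi>, should be 1 for a normalised state
amplitude = (bra(phi) @ psi).item()    # <phi|psi>
probability = abs(amplitude) ** 2      # probability of finding psi in state phi

print("<psi|psi> =", norm_sq)
print("<phi|psi> =", amplitude)
print("|<phi|psi>|^2 =", probability)
```

The conjugation inside `bra` is what makes ⟨ψ|ψ⟩ come out real for complex amplitudes; dropping it would give 0 for this particular ψ instead of 1.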

If we compare the size at which quantum mechanics «begins» to apply with that of a human being 1.7 metres tall, the human is about 10^27 times larger. How many times larger is the Universe than a human being? Well, about 10^26 times larger.
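A rough check of those orders of magnitude, as a sketch only. The diameter of the observable universe used here (about 8.8 × 10^26 m) is my assumption and is not stated in the post; the second figure simply shows what length a factor of 10^27 below human height would correspond to.

```python
import math

HUMAN_HEIGHT_M = 1.7
# Assumption (not from the post): diameter of the observable universe, in metres.
OBSERVABLE_UNIVERSE_DIAMETER_M = 8.8e26

# How many times larger the universe is than a person.
universe_to_human = OBSERVABLE_UNIVERSE_DIAMETER_M / HUMAN_HEIGHT_M
universe_exponent = math.floor(math.log10(universe_to_human))

# Length implied by the other figure: a scale 10^27 times smaller than a person.
implied_small_scale_m = HUMAN_HEIGHT_M / 1e27

print(f"universe / human = {universe_to_human:.1e} (order 10^{universe_exponent})")
print(f"10^27 times smaller than a person = {implied_small_scale_m:.1e} m")
```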

We seem to be particles that think; I think the Rationals suit us better than the Imaginaries, so the function chosen for the bras would be left without its «conjugate» and only a little «transposed».

I wanted to express my opinion that the real fascism is to be found among today's violent groups which, encouraged from political power, call themselves «antifascists».

But I read this article, which I copy and paste and with which I basically agree:

«David Mejía. Updated Sunday, 2 November 2025 – 00:07

In my years of youth and vulnerability, I wondered why antifascists did not simply call themselves democrats. After all, being antifascist is a necessary condition of being a democrat, but not a sufficient one. If the intention is to defend democracy, why risk being mistaken for a totalitarian of another breed? Pol Pot was an antifascist too.

I soon understood that nobody has ever picked anyone up by boasting of being a democrat. Radicalism is more attractive and works much better in bars. Boasting of being a democrat is like boasting of being an administrative assistant or of slowing down on bends. It must be admitted that, when the skinheads show up, it is not very reassuring to hear: «Stay calm, the democrats are coming». The word «antifascist», by contrast, oozes strength, risk and commitment.

One thing that surprised me about antifascists is that they called the lads in polo shirts and boat shoes «fascists», and the hordes of hooded youths who threw stones at the police, burned bins and boycotted Fernando Savater's lectures «kids». (You see, another thing you cannot do if you declare yourself a democrat is boycott Fernando Savater's talks. It is all limitations.)

One day I understood that the antifascist would never declare himself a democrat because he would lose, besides the glamour, his most prized possession: the licence to use violence. The antifa can beat, harass, destroy or boycott merely by saying that this or that act, this or that presence, promotes, benefits, whitewashes or encourages fascism. I understood that in a democracy antifascism is nothing more than a pretext for political violence. And therefore that the purpose of antifascists was not to protect us from fascism, but from democracy.

The journalist José Ismael Martínez received a beating, a very antifascist one, in Pamplona. The usual fools have celebrated the «kids'» act of resistance, and from the Government, which halted its agenda to condemn the chants at the Elías Ahuja hall of residence, there has been only silence. As long as it depends on the support of Bildu and Podemos, it will have to put up with whatever their cubs do. If its survival requires tolerating violence, it will do so. And the weaker it feels, the more inclined it will be to turn shared spaces into arenas of struggle and intimidation.»

And yes, the Government has clearly shown its cards:

«from the Government, which halted its agenda to condemn the chants at the Elías Ahuja hall of residence, there has been only silence. As long as it depends on the support of Bildu and Podemos, it will have to put up with whatever their cubs do. If its survival requires tolerating violence, it will do so. And the weaker it feels, the more inclined it will be to turn shared spaces into arenas of struggle and intimidation.»

Please do not forget this sentence:

«And the weaker it feels, the more inclined it will be to turn shared spaces into arenas of struggle and intimidation»

So it was.

It was a great Pyrenean valley in Aragón that I did not know; well, not any more.

I do not feel like writing or spinning a tale, so this will be brief.

I went at the end of October (2025): 22 degrees in Valencia and 12 in Hecho.

Things:

Outside weekends, many restaurants and bars are closed at this time of year; after 8, the (few) people walking the streets are probably us outsiders.

A pretty village. How to learn more? The guided tour of Hecho: take it; Gemma is a very professional guide. By the way, her guided tour of the “corona de los muertos” is very interesting and entertaining, and it takes place in the Selva de Oza.

Accommodation: I stayed at Lo Foratón; €45/night, double room for single use. Home-cooked lunches and dinners (around €15). The heating comes on from about 6:30 until 9 and from 18:00 until about 12. Pleasant service, no problems.

If you urgently need mountain gear, there is a sports shop right there.

Siresa, two km away, is worth a visit; the Romanesque church is interesting: it speaks of the crossing of the Pyrenees by the Puerto del Palo. On YouTube you can find information about this church.

There are quite a few routes of different levels.

It is a wide valley.

Suggestions

The Boca del Infierno could be made safer with a couple of traffic lights or by setting a timetable. What sense does it make to allow two-way traffic on a 4 km stretch with sections signposted as 3.5 metres wide (cars are usually around 1.8 to 2 metres wide)?
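The arithmetic behind that question can be made explicit. A minimal sketch, using only the figures quoted above and ignoring mirrors and any safety margin (so the real situation is worse); the function name is hypothetical.

```python
ROAD_WIDTH_M = 3.5            # width signposted on the narrow sections
CAR_WIDTHS_M = (1.8, 2.0)     # typical car widths quoted in the post

def passing_shortfall(road_width_m, vehicle_width_m):
    """Metres missing for two equal vehicles to pass side by side.

    A positive result means they do not fit, even with zero clearance.
    """
    return 2 * vehicle_width_m - road_width_m

for width in CAR_WIDTHS_M:
    shortfall = passing_shortfall(ROAD_WIDTH_M, width)
    print(f"two cars of {width} m need {2 * width:.1f} m: short by {shortfall:.1f} m")
```

Even two of the narrower cars need more than the signposted width, which is why one of them ends up reversing.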

Some routes lack signposting; for example, the Sierra de los Ríos has an information post at the height of the Puerto de Ansó; why does it not have one at the turn-off below, almost in the valley? Are two small cairns with no indication at all enough?

Despite all the reasons against it, there are solo hikers; hotels could set up a routine service consisting of leaving a simple sheet at the front desk on which guests may voluntarily write their room number and the day's route (if they have a problem and do not return, it is known roughly where to look); we are talking about 365 sheets a year (less than €5/year).

The roadworks.

Anyone who has travelled north from Huesca knows that today's roads bear no relation to those of years ago; well then, Sabiñánigo and Jaca now take the prize (they have for years, but the terrain really is something!). You have to reckon with a stretch of continuous roadworks at 40 km/h, where the GPS is no use and where, besides the signs, you have to use your intuition (it is not very hard to get it right).

And what did I do?

1st- I saw Hecho

2nd- I followed two guided tours

3rd- I saw Siresa

4th- Gabardito

Why did I not go to Acherito, or to Aguas Tuertas? Honestly: so as not to go through the Boca del Infierno any more times (three reversing manoeuvres were quite enough for me). And how is it that buses of the appropriate size go through? And livestock lorries? And large vans? Because those coming the other way practise reversing (a width of 3.5 only goes so far: rock on one side, the “crash barrier” on the other). By the way, there are also hikers, families with their children included, who walk through the “Boca” because it is a beautiful place. We could have got ourselves killed! Despite all this, there are those who pass through as if it were nothing, who come and go and meet nobody… well yes, that is how it is. But I think it is a situation that needs solving.

Among Ordesa, Añisclo, Pineta, Tena, Benasque, Chistau, Aragón, Bujaruelo, Ara, Hecho and Ansó… which would I keep to return to again and again? A couple of them, or three. Not forgetting the Montaña Palentina (Cervera de Pisuerga), the white villages (Capileira…)… etc.

It has to be accepted that in hiking, as in the great majority of matters and contrary to the fashionable do-goodism, we are limited (some people more, others less).

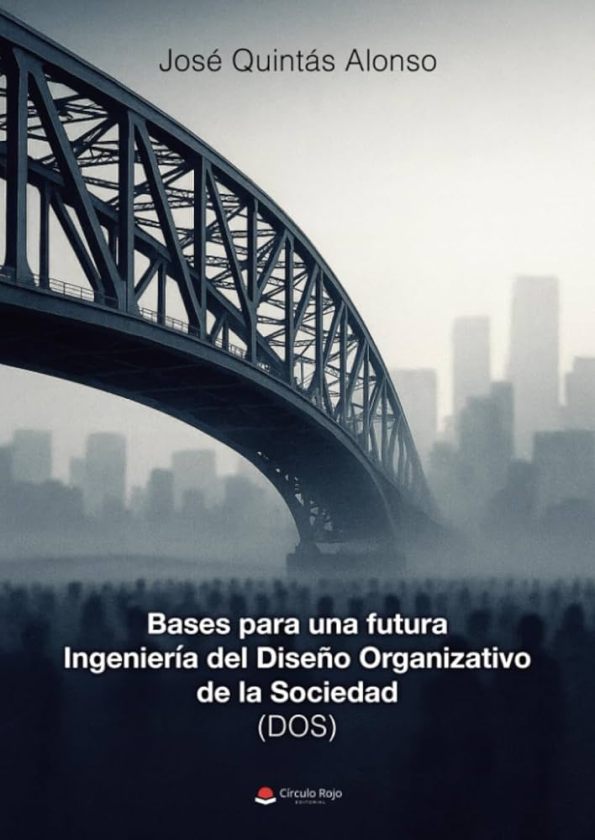

Editorial note.

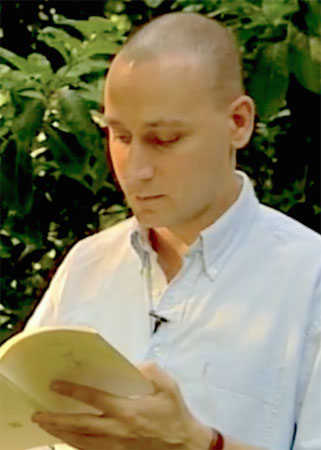

Book: Bases para una futura Ingeniería del Diseño Organizativo de la Sociedad (DOS)

Author: José Quintás Alonso

Publisher: Círculo Rojo (2025)

Available:

👉 Amazon

Interview with José Quintás Alonso, author of “Bases para una futura Ingeniería del Diseño Organizativo de la Sociedad (DOS)”

Question. Where does the idea of applying engineering to the design of society come from?

Answer. It has been a personal process spanning some 30 years, in which work, family, organisations, observation, study, research and holidays all had to be balanced… I know something about designing software, and that activity is the opposite of improvisation, of dogma, of personal interest as the overriding goal: we use methods, data and tests. My proposal is to bring the rigour of engineering to the field of collective decisions, without dogma, with tools that we can measure, validate and improve. Along the way I came across the work of J. W. Forrester, an absolute pioneer in pointing out this need.

Question. How does this differ from the old “social engineering” or from utopias?

Answer. In that it does not start from closed ideologies, but from testable methods. It does not promise paradises; it proposes processes: formulate hypotheses, model, simulate scenarios, pilot on a small scale, evaluate results and correct. It is the opposite of the “perfect plan”, of the “absolute truth”, of the “path of History”: it is iterative learning with damage control.

Question. What does it mean to “verify and validate” public or business decisions?

Answer. The same as in engineering: verifying that the design implements what the model says, and validating that this design works in reality according to the objectives and constraints. It can be applied to a law, an urban policy or an industrial plant. If it does not pass those tests, it is adjusted or discarded. In this respect, the strategic planning processes of large cities and metropolitan areas provide valuable experience (including, at times, the disdain of those not leading the process at that moment).

Question. What role do data and computational models play?

Answer. A central one. We combine deductive, inductive and abductive methods, and we use models to simulate decisions before carrying them out. One example could be HOSS (Human Organizations Simulation Start). As for HOSS, I must say that I am a layman in Python; there will therefore be hundreds of things that could be improved. Moreover, by my reckoning it is at version “-6”, and I think it should be analysed again, incorporating what has been learned, and started afresh, using tensors, NumPy and Keras.

Question. And artificial intelligence? What does it really contribute?

Answer. AI does not decide for us; it helps us think better. I use it to check for consistency, synthesise information and explore scenarios faster. I sum it up with irony: “at least there are now two of us who think this proposal is viable”. The key is human + AI, each doing what it does best. I have felt very comfortable working with OpenAI's ChatGPT.

Question. Who is the book aimed at?

Answer. A twofold audience. First, any reader interested in how to organise collective life better; I explain the approach in plain language. Second, technical people and teams (engineering, economics, computational sociology, policy) who want to start building.

Question. What does a university or company gain by adopting this approach?

Answer. Less improvisation and more verifiable learning. You gain the capacity to anticipate, reduce the cost of errors and speed up iteration with evidence. Lines of applied research also open up: theses, social simulation laboratories, pilots with clear success metrics. Let me turn the question around: what does a car company gain from its teams that design and test new models? Why does a committee not design them?

Question. What would you like to happen from now on?

Answer. That someone takes on the core. A variety of cheap and interesting projects could be attempted; for example:

– Apply the PEU model to another city or metropolitan area, as an organisational diagnosis. Replace the variables gathered from people with more “mechanical” measures.

– Establish an “organisational canvas” that makes it possible to visualise a social structure based on macro variables (TC, CAG, OM…).

– Design an interdisciplinary seminar (engineering, economics, sociology, history, physics…) on DOS.

– Write up an applied research report or a TFG/TFM (bachelor's or master's thesis) with some of these elements.

– Build a small HOSS-style simulation in Python, using tensors, with basic intentionalities. Is it possible to give each ph(i,palp) some “neuron”, that is, a minimal capacity for autonomous learning?
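As a purely illustrative answer to the last question, here is a toy sketch of agents that each carry one linear “neuron” and learn on their own with a delta rule. It is not HOSS and does not reproduce its data structures: the agent count, the inputs, the hidden `true_rule` of the environment and the learning rate are all hypothetical choices made for the example, and it uses plain NumPy arrays rather than Keras.

```python
import numpy as np

rng = np.random.default_rng(0)

N_AGENTS, N_INPUTS = 5, 3      # hypothetical sizes
LEARNING_RATE = 0.1            # hypothetical learning rate

# One weight vector per agent, stored together as a 2-D tensor (agents x inputs).
weights = rng.normal(scale=0.1, size=(N_AGENTS, N_INPUTS))

# Hypothetical "environment": the response every agent is trying to anticipate.
true_rule = np.array([0.5, -0.2, 0.8])

def step(weights, x):
    """One learning step for all agents at once (delta rule)."""
    prediction = weights @ x             # each agent's guess, shape (N_AGENTS,)
    target = true_rule @ x               # what actually happens (scalar)
    error = target - prediction          # each agent's own mistake
    new_weights = weights + LEARNING_RATE * np.outer(error, x)
    return new_weights, np.abs(error).mean()

errors = []
for _ in range(200):
    x = rng.normal(size=N_INPUTS)        # a random situation observed by everyone
    weights, mean_error = step(weights, x)
    errors.append(mean_error)

print("mean error, first 20 steps:", np.mean(errors[:20]))
print("mean error, last 20 steps: ", np.mean(errors[-20:]))
```

Each agent only uses its own error, so the learning is autonomous in the minimal sense asked about; richer intentionalities would need a richer model.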

Interdisciplinary teams can try out pilot cases: from urban policies to the internal processes of organisations such as town councils and metropolitan areas. And let them build up a shared “body of practice”, as in other branches of engineering: what works, when and why, with data and transparency.

Question. What is the biggest misunderstanding about the “organisational design of society” that you come across?

Answer. Well, at least two misunderstandings. The first is that some take “organising better” to mean controlling more. No: organising is enabling. It is about aligning intentions, resources and rules so that people and teams achieve better results with less friction, and doing so in a way that is evaluated, audited and public. The second is that many think the organisational structure of society has to be a single one, as if it belonged to a single political party, without taking into account the passage of time, technology or resources.

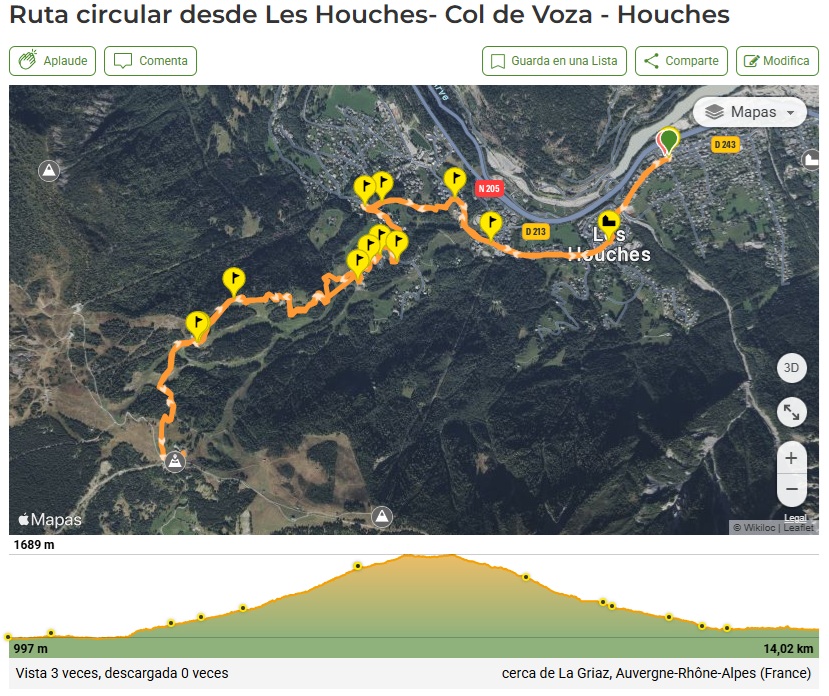

Organised by Montañas del Mundo (MdM). Guides Marta and Vicente, with the collaboration of Sergio: thanks to all three.

From 8 to 12 October 2025

The bus journeys, comfortable and excellently driven by José, are what they are; but with two short stops (a 45-minute break is required: 45 at once, 15 and 30, or 30 and 15) they become perfectly manageable.

We did four routes, whose links are below:

Txindoki Circular (those who wished reached the summit)

By the way, because of the fog one excursion was cut a little short and we went by bus to the monastery of S. Miguel de Excelsis; personally, it gave me the chance to recall my previous visit, along with its mountaineering and Romanesque circumstances.

Two routes run through Gipuzkoa and two through Navarra, for the Sierra de Aralar knows nothing of administrative divisions; those are entirely our own doing.

In total, for me, it meant the effort needed to cover 47.77 km, with 1,575 m of ascent and 1,981 m of descent.

A good group: punctual, polite, friendly.

Excellent people and landscape.

What more could one ask for?

The text “Bases para una futura Ingeniería del Diseño Organizativo de la Sociedad (DOS)” can now be purchased on Amazon and Libros.cc (paperback).

The essay sets out the conceptual and methodological foundations for moving towards a discipline capable of analysing, designing and improving the organisation of a complex society from a scientific, technological and humanist perspective, integrating models, data and engineering methods.

👉 Amazon version

👉 Libros.cc version

Thank you for spreading and sharing this initiative!

(Image: courtesy of Editorial Círculo Rojo)

I ask Google.

Question.-

Currently: armed conflicts with a significant number of deaths

Answer.-

Some of the most prominent include the war between Russia and Ukraine, the conflict in Gaza and the situation in Sudan. In addition, there are ongoing conflicts in countries such as Burkina Faso, Somalia, Yemen, Myanmar and Nigeria, among others.

- Conflict in Ukraine:

The Russian invasion of Ukraine, launched in February 2022, has caused a humanitarian crisis and a mass displacement of people.

I search, and according to Xataka (5 June 2025) Ukraine has reportedly lost between 60,000 and 100,000 soldiers.

- Conflict in Gaza:

The war between Israel and Hamas, which began in October 2023, has caused a serious humanitarian crisis in the Gaza Strip and has escalated tensions in the region.

I search, and according to Wikipedia the number of dead (men, women, children) stands at 72,156.

- Conflict in Sudan:

The civil war in Sudan, which broke out in April 2023, has caused a serious humanitarian crisis and mass displacement of the population.

- Conflict in Ethiopia:

The civil war in Ethiopia, involving the government and rebel groups, has caused a serious humanitarian crisis and displacement of the population.

- Conflict in Syria:

The civil war in Syria, which began in 2011, remains a source of instability in the region.

- Other conflicts:

Besides those mentioned, there are armed conflicts in countries such as Burkina Faso, Somalia, Yemen, Myanmar and Nigeria, among others.

Question.-

Spain. How many student strikes have been called over the Russian invasion of Ukraine?

Answer.-

There is no record, in the sources consulted, of general calls for a student strike in Spain specifically over the Russian invasion of Ukraine.

Question.-

Spain. How many student strikes have been called over the Israeli devastation of Gaza?

Answer.-

- 2 October 2025: The Sindicato de Estudiantes called a student strike across Spain to denounce the situation in Palestine. The protest included academic stoppages and demonstrations in several cities.

- 27 September 2024: The Sindicato de Estudiantes also organised a student strike and day of action to call for an end to trade relations with Israel.

Question.-

Spain. How many student strikes have been called over the conflicts in Sudan, Ethiopia or Syria?

Answer.-

There is no public record of calls for a student strike in Spain specifically over conflicts in Sudan, Ethiopia or Syria, although there have been student strikes for other reasons, such as, for example, a general strike called in October 2025 in solidarity with Gaza.

Finally.-

Today, 13 October, I learn that a general strike has been called in Spain for the 15th; unbelievable!

For the organisations calling this Super-Strike, the agreements accepted by Hamas are NOT enough…

Sabato wrote:

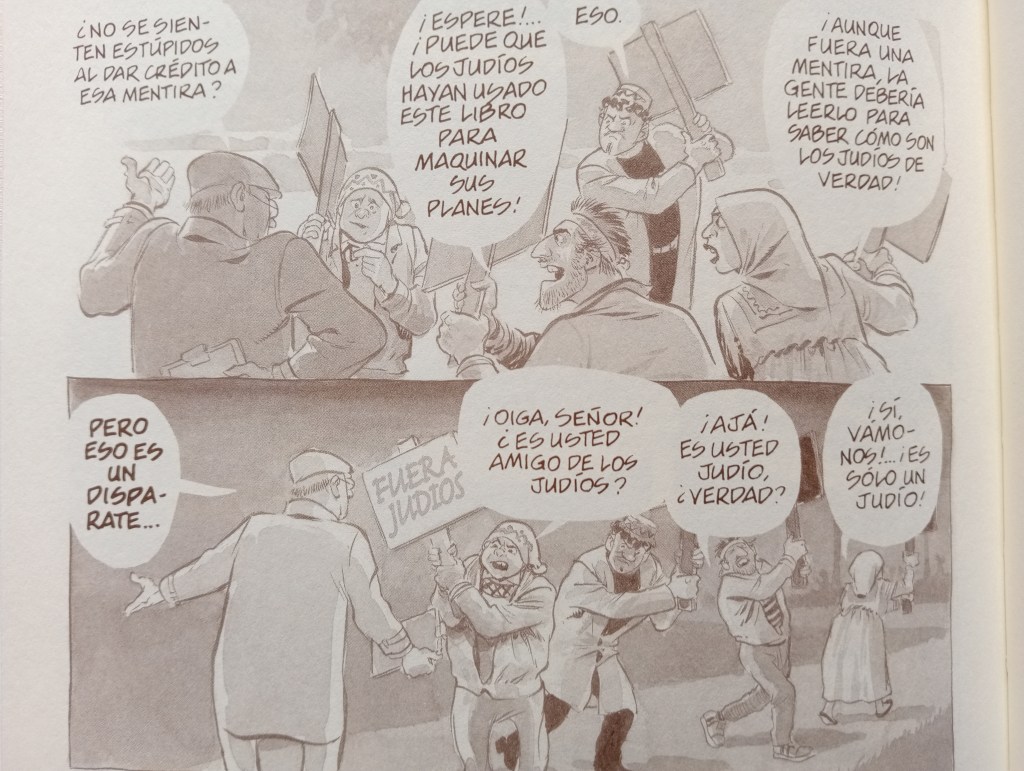

«Violating […] Aristotelian logic, the antisemite will say successively, and even simultaneously, that the Jew is a banker and a Bolshevik, miserly and spendthrift, confined to his ghetto and present everywhere. […] Judeophobia is of such a nature that it feeds on anything. The Jew is in such a situation that whatever he does or says will serve to stoke the unfounded resentment.» Sabato, Apologías y rechazos

I have heard the questions, which are sound, and I have heard the answers, which express the opinion of Tomás Gómez, a person who has played a prominent role in the Madrid federation of the PSOE and therefore in that party.

I agree with a good part of the analysis and share his pessimism on noting that today's PSOE, which set off down this path with Zapatero (today Maduro's backer and the negotiator sent by P. Sánchez to talk to Puigdemont), is in no way the social democracy that helped bring about the Transition and won us our place in the EU; today's PSOE seems to me some other «thing» that keeps up a stage set, presided over by the initials, simply to collect votes from those who identify the brand with their own idea of that brand, which does not match present reality.

If there is a clear political Centre, I think the PSOE will follow the path of its French counterparts and company.

And what about those PSOE members who are beginning to consider a possible replacement for Sánchez? Only the ballot box will make Sánchez stop presiding over the «investiture bloc», and Bildu, Junts, PNV, Sumar, IU, Podemos… and Sánchez, of course, know that perfectly well. It is possible that the support for Sánchez from Bildu, Junts, Sumar… is stronger than the support of PSOE voters for that party. Why not maintain this situation until 2031? Might nobody be thinking of building a narrative to delay the elections beyond 2027 while keeping that «investiture bloc» in place: a narrative justified by an armed intervention, by an intensification of the hybrid warfare Putin is so fond of, by a pandemic, by a white rabbit… with the supreme good of the people as a backdrop, and the favourable report of the Constitutional Court presided over by Mr Pumpido?

Nooooo, nobody is thinking that.

But why such fear of calling elections? Poll after poll, Tezanos's CIS points to a PSOE recovery, and besides, a person who has voted PSOE thirty times (or more: European, national, regional, municipal), who are they going to vote for now? Most will keep voting PSOE. But some will abstain (not many), others will pick by eeny, meeny, miny, moe (not many), others will go to Podemos (not many), others to the PP (not many), others to VOX (not many), others to Ciudadanos (not many), others to Compromís (not many), others to ERC (not many)… How many seats do the «not many» add up to? Seven fewer deputies (for example). Bad news.

The narrative is one thing; instinct is another.

If part of the PSOE thinks it possible and desirable to start preparing an internal alternative to Sánchez, it had better start using the votes of the deputies committed to that exploratory exercise… or it will not be credible. And if ALL the current deputies hold the party as their supreme Good and their job as the second, what credibility will their criticisms, well-founded or not, have?

Anyway, this post sees the light on 3 October and the Budget has still not been presented, for the third year running. Is that not reason enough to call elections? I know the President says that failing to comply with the Constitution is not so serious, that Spain is doing well (do not ask me how, but unemployment has fallen) and is the beacon of the world (President, why do you not listen to what you used to say to Rajoy?).

Are there not seven PSOE deputies willing to press for an early election? Will they do so if elections are NOT called in 2027?

I think the PSOE will follow the path of its counterparts (again)… if there is a clear political Centre.

Note.- Article 134 of the Constitution, section three: The Government must submit the General State Budget to the Congress of Deputies at least three months before the expiry of that of the previous year.

Quo vadis, PSOE?

I firmly believe that a global organisation is needed to minimise conflicts (with a view to moving beyond that form of «problem solving»); it could be the UN, it could be.

A few days ago, it seems, Zelensky said at the UN: «With weak institutions, weapons decide who survives». I agree with him.

Putin, by means of elections, is the one who has run that great state that is Russia for a great many years; we know his appetite for war which, years ago now (and it was not his first exploit), showed itself in Crimea and more recently in the (second) invasion of Ukraine; just like that, because he felt like it. Russia has a seat at the UN with the right of veto.

What can the UN do? It obviously needs to reorganise itself and find a balance.

At present, with the question of Gaza on the table, what firm decision will the UN adopt? None, apart, of course, from encouraging «posturing».

As the UN stands, one of its great achievements is having as Secretary-General the socialist A. Guterres, clinging firmly to the post.

In short, a global organisation is necessary and it can be the UN, a UN reformed by applying common sense, seeking to exercise a couple of powers, no more… if pressed: a single power.

If that does not happen, Zelensky has already put it masterfully: «With weak institutions, weapons decide who survives».

We went to the Dolomites with Alventus; we based ourselves in Pecol and from there followed six routes:

Each route has its attraction, its challenge. According to Wikiloc plus my phone, the summary is: about 74 km with 3,308 m of ascent and about 3,837 m of descent; the routes lie between 1,467 m and 2,451 m (obviously not counting the Sass Pordoi cable car at 2,950 m which, however, is an attraction in itself and can also be a starting point; there are zigzag paths and climbing routes for those who prefer other options without taking the cable car).

The Dolomites are not exhausted by what we saw and walked; it is an extensive region, with high, legendary summits, sharp needles, great forests, restorative refuges…

The guides David and Jorge, number one: always attentive and on familiar geographical and human ground.

Intense days

(Note.- six nights in the mountains, at Pecol, and two nights in Venice)

Google's AI:

«A failed state is one that has lost the capacity to perform its basic functions, such as maintaining order, providing security and services to its citizens, or enforcing the law. The term is used to describe countries suffering from deep political, economic and social crises, often with widespread violence and the collapse of institutions.»

Budget, management of the DANA, management of the wildfires, housing…

Pedro Calderón de la Barca (1600-1681), La vida es sueño (1635)

Act 2, scene 19 (Segismundo's monologue)

SEGISMUNDO:

Es verdad, pues: reprimamos

esta fiera condición,

esta furia, esta ambición,

por si alguna vez soñamos.

Y sí haremos, pues estamos

en mundo tan singular,

que el vivir sólo es soñar;

y la experiencia me enseña,

que el hombre que vive, sueña

lo que es, hasta despertar.

Sueña el rey que es rey, y vive

con este engaño mandando,

disponiendo y gobernando;

y este aplauso, que recibe

prestado, en el viento escribe

y en cenizas le convierte

la muerte (¡desdicha fuerte!):

¡que hay quien intente reinar

viendo que ha de despertar

en el sueño de la muerte!

Sueña el rico en su riqueza,

que más cuidados le ofrece;

sueña el pobre que padece

su miseria y su pobreza;

sueña el que a medrar empieza,

sueña el que afana y pretende,

sueña el que agravia y ofende,

y en el mundo, en conclusión,

todos sueñan lo que son,

aunque ninguno lo entiende.

Yo sueño que estoy aquí,

destas prisiones cargado;

y soñé que en otro estado

más lisonjero me vi.

¿Qué es la vida? Un frenesí.

¿Qué es la vida? Una ilusión,

una sombra, una ficción,

y el mayor bien es pequeño;

que toda la vida es sueño,

y los sueños, sueños son.

The simulation hypothesis, simulation argument or simulism proposes that all of existence is a simulated reality, such as a computer simulation.[1][2][3] This simulation could contain conscious minds that may or may not know that they live inside a simulation. This is quite different from the technologically achievable concept of virtual reality, which is easily distinguished from the experience of actual reality. Simulated reality, by contrast, would be hard or impossible to separate from the supposed «true» reality. There has been much debate on this topic, ranging from philosophical discourse to practical applications in computing.

Popularised in its current form by Nick Bostrom,[4] it resembles various other sceptical scenarios in the history of philosophy. The idea that such a hypothesis is compatible with all human perceptual experiences is considered to have important epistemological consequences in that field.

The hypothesis develops the notion of René Descartes's evil demon, but takes it further by analogy into a future simulated reality. The same fictional technology appears, in part or in whole, in science fiction films such as Star Trek, Dark City, The Thirteenth Floor, Matrix, Abre los ojos, Vanilla Sky, Total Recall, Inception and Source Code.[5]

The hypothesis popularised by Bostrom is much disputed, for example by the theoretical physicist Sabine Hossenfelder, who called it pseudoscience,[6] and the cosmologist George F. R. Ellis, who stated that «[the hypothesis] is totally impracticable from a technical point of view» and that «the protagonists seem to have confused science fiction with science. A late-night pub discussion is not a viable theory».[7]

Virginia GómezMadrid

Actualizado Sábado, 9 agosto 2025 – 00:25

Hay que alejarse del circuito habitual de las letras y desplazarse hasta el Barrio del Pilar para descubrir la librería más fantástica de Madrid. Mimetizados entre más de 60.000 libros distintos, allí están la casa de Bilbo y Frodo, el edificio del Daily Planet y el simpático R2-D2. También una biblioteca japonesa con sus farolillos y sus neones de color, una catedral gótica con gárgoras con forma de Yoda o Stich y vidrieras de Asterix y Obélix, y hasta el submarino Nautilus de Julio Verne. El proyecto de la familia Marugán, Akira Cómics (Avenida Betanzos, 74), es una rara avis en el mundo de los libreros, pero gracias a acento tan particular ha sabido encontrar su hueco en el mundo. Y no uno cualquiera. Acaban de recibir en la Comic-Con de San Diego (EEUU), por segunda vez, el mayor premio de la industria a nivel mundial, el Eisner Spirit of Comics. Un reconocimiento que no hace más que dar alas a los hermanos Jesús e Iván -hoy a los mandos-, para seguir agrandando el proyecto.

Yet if anything made their nomination stand out on the way to the title of best bookshop in the world, beating the other 99 candidates, it was not only the shop's exotic decor but also the educational work they do with the youngest readers, creating, through a range of activities, a battalion of young readers to secure the future of comics.

«We never thought we would get this far», says Jesús of the bookshop, which was born in '93 as a family project. «My parents wanted to start a business and asked my brother and me if we had any ideas. Since we are total geeks about this, we proposed a comic book shop», the bookseller explains. «My father did a market analysis and there was nothing similar, so he agreed. Although they had considered opening a bar, a garage, a bakery…», he adds alongside his brother as they finish opening boxes of the new releases that arrive each week.

They started out, under the name of the film that brought manga to the West (Akira), in premises of barely 25 m² that have since grown to 1,080 m² (the largest in Europe), picking up the best ideas they have seen around the world over the years. Both brothers travel constantly, exchanging experiences with booksellers from every corner. And little by little, especially since they won that first Oscar of comics in 2012, they have been transforming the shop into a stroll around the world made to the measure of fiction.

«An award can be the end of one road or the beginning of another», says Jesús. And they focused on the second premise. They stepped on the accelerator, he notes, to create the sector's first mobile app with sales, launched storytelling sessions and began the transformation of the establishment.

One of the spaces at Akira Cómics, with the Tolkien tree. ELENA IRIBAS

Today their comics are classified by theme to make searching easier for the less expert, but also by places that can orient the readers who venture in. From the decor, one can guess whether one is standing before the comics of America (on seeing the house from The Lord of the Rings and the superheroes), those of Europe (through that cathedral of sorts) or those of Japan (where lucky cats shine among manga comics), each with display stands typical of its place. A classification that may seem normal but, according to Jesús, is not. «In comic shops, everything is normally arranged by publisher», he explains. «We are oddballs», Jesús admits.

Beyond all that, Akira also has room for literature of every genre and for children's books, displayed between walls reminiscent of a late 19th-century Victorian library, with nods to the Nautilus (the submarine created by Jules Verne), the magical trees imagined by J. R. R. Tolkien and the giant plant from Jack and the Beanstalk.

«The design of the decor is mine», says Jesús, who has even hand-painted some of the elements that dress the shop. «Each of us takes care of certain things», he says, referring to his brother, who looks after, among other things, the numbers of the business, which is also part museum.

A Darth Vader suit made to measure for Jesús, a big Star Wars fan, is displayed, for example, in one of the glass cases. «I used to wear it to the premieres at Kinépolis. Álex de la Iglesia saw me once on TV and contacted me to borrow it for the film La Comunidad (2000)», the bookseller recalls.

It is not the only treasure with a story in this place, where they also run guided tours. One of the rooms holds original drawings from the best-known comics. «We have a Watchmen original, of which very few exist in the world. It is our Gioconda. And that draws tourists from all over the world», says Jesús in the museum room they created in 2014, where they hold activities and storytelling sessions for the youngest. That «reading sustainability» project, he says, is what earned them this latest award. «We are building a pool of future readers. For children aged 4 to 8 we have storytelling; for those aged 10 to 12, drawing activities and talks; for teenagers, motivation and guidance talks; for those studying degrees, career guidance», he describes.

«For them to give you a second award, at least 10 years have to pass or you have to have changed something», the bookseller explains. The Akira of today has nothing in common with that of 2012. Nor, perhaps, will it a decade from now. «The shop will never be finished, complete. We are always doing something new. We have got used to the building work», he adds. Next up is finishing the cathedral so that customers are amazed as soon as they open the door.

A friend sent me an email about today's PSOE, whose Secretary General is Pedro Sánchez. In it he tells me what we would “lose” if Sánchez stopped being President of the Government; for example:

- The indexation of pensions

- Transport subsidies for certain groups

- The minimum wage would fall

- etc.

- And lastly, healthcare, education… would become 100% fee-paying.

This person sat a set of “oposiciones” (competitive civil service exams), yet that does not lead him to question any of the claims he makes: he passes on the “breviary” without thinking, because he likes the sound of the tune.

If Sánchez resigns and the PP wins an election, would healthcare, education… become fee-paying? This sentence is a gem. To begin with, public healthcare and education date back to the dictatorship (even if they have since been modified and extended); to end with, 11 autonomous communities are governed by the PP today, and there are exclusive competences. My friend's claim is a mixture of fortune-telling and fuck news.

No doubt, when governments change, there are bound to be attempts at modification, within margins that, broadly speaking, must be set by the Constitution.

I will not go on commenting on my friend's plaintive lament over a Sánchez resignation, for reasons of space and because I think contemplating Sánchez's resignation is pure fiction; someone nicknamed him “Don Teflón” and I think it is a very apt metaphor. He can spend 4 years on rolled-over budgets without listening to his own recordings from the Rajoy era. What a disgrace!

Does the above mean that EVERYTHING the current Government has promoted is hopelessly bad? NO; in fact there are several important matters, risky bets (e.g. the amnesty), whose effects are still up in the air. Nobody knows what their future implications will be for the path Spain takes as a State: personally, I could not say whether they will be successes or failures.

Does the above mean that EVERYTHING the current Government has promoted is thoroughly GOOD? NO; in fact, everything to do with the interpretations, the changes of opinion, the necessities and virtues, the continued application of the «double standard» in an «outdated civil-war-minded» context… tires me, angers me and bores me.

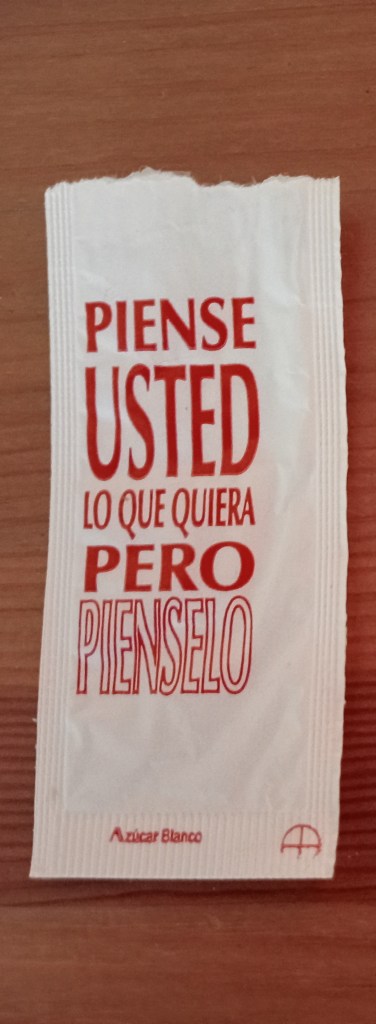

But let my friend, who no doubt represents a good share of the current PSOE's voters, know that he can think and say what he likes (without offending others, I take it) and, if possible, I would be grateful if he heeded this piece of morning-coffee advice:

Then there is the incredible story of «newspeak», «post-truth», «demagoguery», «Fuck news»… and other related concepts.

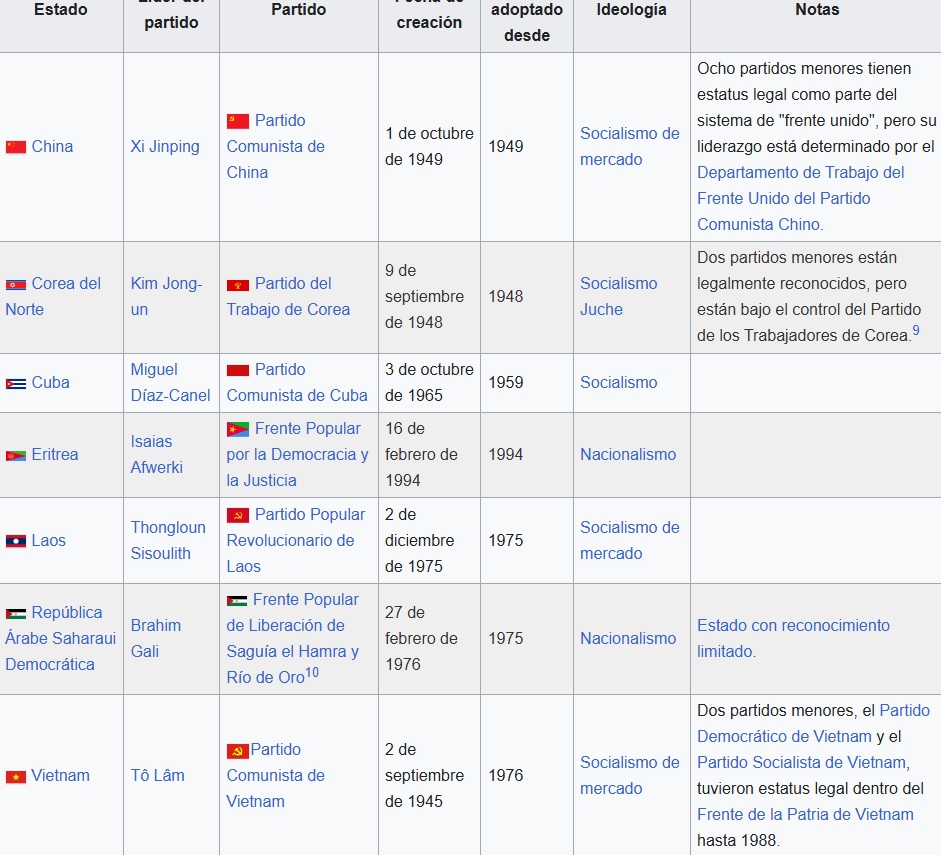

I was sent a video of the rector of a US university that is having trouble with Trump; he spoke about newspeak and quoted Orwell. A veeery long time ago, Orwell warned about newspeak as something the «comrades» on the farm were doing. What used to be called the left proved its skill at creating new concepts and pure newspeak. Populists from every side of the political spectrum have copied this attitude (Trump among them, I suppose). There is no point complaining about it: they play with similar tricks, although they are far from matching the «newspeak output of the left». For example, suppose we agree that a state with a single party is totalitarian, is a dictatorship; let us place ourselves in the present day and go to Wikipedia, whose article I recommend reading in full:

And now let us cross-reference these data with the HDI (Human Development Index, calculated by the UNDP). Where do they rank?

China: rank 78

North Korea: not calculated by the UNDP

Cuba: rank 97

Eritrea: rank 178

Laos: rank 147

Vietnam: rank 93

To give an idea, Spain is ranked 28th, and the top six places go to:

Iceland, Switzerland, Norway, Denmark, Sweden, Germany

It takes a lot of newspeak to swallow those facts and still belong to communist or communist-leaning parties.

As for corruption (1), the usual: it seems an endemic problem in our country, and I doubt it will be solved with permissive laws and interminable proceedings, for as the «morning-coffee advice» says:

If you take the above literally, what will the would-be crooks do? Wrap themselves in values until the price they can fetch matches their aspirations; although, looked at another way, it may be that the tempter's offer, on THIS occasion, is high enough to be worth considering…

«and said to him: All these things I will give you, if you will fall down and worship me. 10 Then Jesus said to him: Away with you, Satan,»

These are old themes and the problem remains; there must be some difficulty in solving it…

By the way, when the time comes to vote on the «singular» financing for Catalonia together with its own tax collection agency (will there be 17 agencies?), if it has to be voted on at all, which I do not know, will there not be at least 10 Socialist deputies, say, who have to step out to the bar for a coffee? Did those two measures come out of a PSOE Congress?

«If there had been ten righteous people in Sodom and Gomorrah, God would not have destroyed the cities, for the sake of those ten people, according to the biblical story of Genesis 18.» It seems there were not 10 such people; I suspect that, posturing aside, there will not be those 10 deputies either.

For a quick smile: skepticism or barbarism

(1) On Koldo, Cerdán, Fontanera… I assume everyone is up to date, given how recent it is. On the recent «Montoro case», I copy and paste a short text; here it is:

«Corruption knows no party. Many recent cases affect the PSOE Government, but those linked to the PP are hardly few. The latest squarely affects the former Finance Minister Cristóbal Montoro, who is under judicial investigation for allegedly granting legislative benefits to certain companies through Equipo Económico, a firm he founded before entering the Government.

The essence of this kind of scandal is always the same: a combination of favouritism, nepotism and influence peddling to try to profit from holding public office. This shows that corruption in Spain is endemic and cuts across the board, affecting every political colour to a greater or lesser degree.

However, whatever the final outcome of the investigation, the most tragic thing about this case is the man himself, since Montoro has been by far the worst Finance Minister in the history of Spain. He raised taxes as never before, overtaking socialists and communists on the left, while he shattered legal certainty and waged a relentless persecution of taxpayers, to the point of using the public Treasury as a private fiefdom to intimidate and punish voices critical of him or his Government.

Time gives and takes away reasons, putting everyone in their place. Montoro will go down in history as a sinister politician who was tremendously harmful to Spain, so may you take with you as much peace as the relief you leave behind…

Once again it is shown that what matters is not party initials or political colours, but ideas. Rajoy's PP has little or nothing to envy Sánchez's PSOE.

MANUEL LLAMAS.

DIRECTOR OF THE IJM»

On this point it also seems quite illustrative to listen to Alsina's monologue of 17/08/2023: «Mal día para Montero, peor para Montoro»

Difficult is the dawn.

Difficult to climb along the path

creating the midday.

As the light declines toward evening

effort is abandoned:

no one can fight against the night,

all that remains is to accept it.

Difficult it is to live while climbing

creating the midday,

without ever declining toward evening

or surrendering to the night.

Let us remain forever dawning.

Let the path be ascent.

Ours is the arduous light of what is born

creating the midday.

Ours is always the virtue of dawn.

Mario Míguez

Pedro Sánchez, I am not joking: anything rather than calling elections… here is an idea…

What if you went there to promote an Alliance for Peace, Justice and Feminism? It is perfectly constitutional.

I started this blog years ago with two aims:

- To let my friends, colleagues, fellow members of organisations… know what I was doing in my free time (more or less); I saved myself their questions and saved them my answers (at times, terribly tedious!)

- To make clear that I advocate a single human organisational unit that looks after the basic aspects of human beings; hence “onuglobal.com”; a wish that obviously has many nuances